Cybersecurity Snapshot: CSA Offers Tips for Deploying AI Securely, While Deloitte Says Cyber Teams’ GenAI Use Yields Top ROI

Check out the Cloud Security Alliance’s recommendations for rolling out AI apps securely. Meanwhile, a Deloitte survey found GenAI initiatives by cyber teams deliver the highest ROI to their orgs. Plus, the NSA urges orgs to combat GenAI deepfakes with content provenance tech. And get the latest on CISO trends; patch management; and data breach prevention.

Dive into six things that are top of mind for the week ending Jan. 31.

1 - CSA: Best practices for secure AI implementation

Looking for guidance on how to deploy AI systems securely? You might want to check out the Cloud Security Alliance’s new white paper “AI Organizational Responsibilities: AI Tools and Applications.”

Published this week, the paper covers three key areas: the security of large language models and generative AI applications; supply chain management; and additional implementation elements, such as employee use of generative AI tools.

Each of those three areas is analyzed according to six areas of responsibility for teams deploying AI systems:

- Evaluation criteria: To assess AI risks, organizations need quantifiable metrics. That way, they’ll be able to measure elements such as model performance, data quality, algorithmic bias and vendor reliability.

- RACI model: It’s key to be clear about who is responsible, accountable, consulted and informed (RACI) regarding AI decisions, selection of tools and vendor management.

- High-level implementation strategies: Teams should outline the process for integrating AI tools and applications into existing workflows, and particularly how they’ll address challenges such as data integration and model deployment.

- Continuous monitoring and reporting: The paper recommends continuously monitoring elements such as AI tool performance and data drift, and regularly generating reports on AI system health and vendor compliance.

- Access control: It’s critical to implement strong access-control mechanisms to protect algorithms, data and AI infrastructure.

- Adherence to AI standards and best practices: Complying with AI standards, regulations and guidelines will help teams, for example, secure AI applications, conduct responsible AI development and mitigate supply chain risks.
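To make the continuous-monitoring item above more concrete, here is a minimal sketch of one common way to quantify data drift: the population stability index (PSI). The white paper, as summarized here, does not prescribe PSI; the function name `psi`, the bin count and the `DRIFT_THRESHOLD` of 0.25 (a widely used rule of thumb) are assumptions made for illustration.

```python
import math
from typing import Sequence

DRIFT_THRESHOLD = 0.25  # hypothetical alert level; tune for your own data


def psi(baseline: Sequence[float], current: Sequence[float], bins: int = 10) -> float:
    """Population stability index between two samples of one numeric feature."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all baseline values are equal

    def shares(sample: Sequence[float]) -> list:
        counts = [0] * bins
        for x in sample:
            # Values outside the baseline range are clamped into the edge bins.
            idx = min(max(int((x - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor keeps the logarithm defined for empty bins.
        return [max(c / len(sample), 1e-6) for c in counts]

    b, c = shares(baseline), shares(current)
    return sum((ci - bi) * math.log(ci / bi) for bi, ci in zip(b, c))


# Illustrative data only: a training-time sample and a shifted production sample.
baseline = [i / 100 for i in range(100)]
shifted = [0.5 + i / 200 for i in range(100)]

needs_review = psi(baseline, shifted) > DRIFT_THRESHOLD
```

A score near zero suggests the production data still resembles the baseline, while a score above the chosen threshold could feed the regular AI system health reports the paper calls for.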

“As AI technologies evolve and their adoption expands across industries, the need for strong governance, security protocols, and ethical considerations becomes increasingly critical,” Michael Roza, the paper’s lead author, said in a statement.

Some of the white paper’s key takeaways include:

- AI security requires addressing both traditional and AI-specific cybersecurity concerns.

- Effective third-party and supply chain management is critical.

- Organizations need clear policies and guidelines for employees’ AI use.

- AI governance requires clear role definitions and specialized skills.

For more information about AI security, check out these Tenable blogs:

- “Securing the AI Attack Surface: Separating the Unknown from the Well Understood”

- “6 Best Practices for Implementing AI Securely and Ethically”

- “How AI Can Boost Your Cybersecurity Program”

- “Never Trust User Inputs -- And AI Isn't an Exception: A Security-First Approach”

- “Harden Your Cloud Security Posture by Protecting Your Cloud Data and AI Resources”

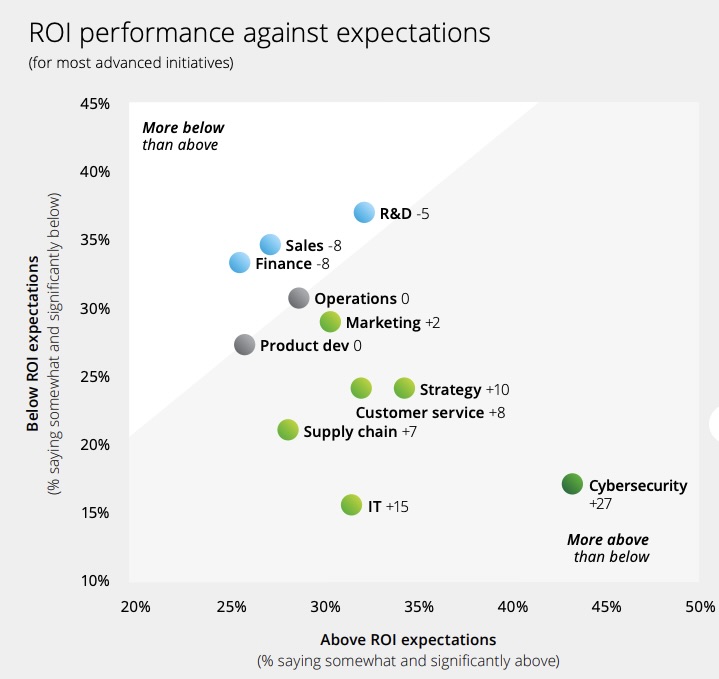

2 - Report: Cybersecurity use of GenAI produces highest ROI

As enterprises deploy generative AI across their business, cybersecurity initiatives are generating the highest return on investment (ROI).

Moreover, cybersecurity initiatives are more deeply integrated into work processes than initiatives from other departments, such as sales, research and development, and finance.

Those findings come from Deloitte’s “The State of Generative AI in the Enterprise: Generating a new future” report, based on a survey of about 2,770 directors and CxOs from organizations piloting or implementing generative AI.

“Relative to other types of advanced GenAI initiatives, those focused on cybersecurity are far more likely to be exceeding their ROI expectations,” reads the report from Deloitte, which polled respondents in 14 countries.

Specifically, the survey found that 44% of respondents' cybersecurity initiatives are delivering an ROI that is “somewhat or significantly above expectations.”

(Source: Deloitte’s “The State of Generative AI in the Enterprise: Generating a new future” report, January 2025)

While these findings reflect positively on how cybersecurity departments are deploying generative AI, the technology still faces adoption challenges in enterprises in general, the report notes, including:

- Regulatory uncertainty

- Risk management

- Data deficiencies

- Workforce issues

Specifically, regulatory concerns have become the top barrier for generative AI adoption, while almost 70% of respondents estimate that it’ll take their organizations more than a year to fully implement a generative AI governance strategy.

The report’s recommendations for successful adoption of generative AI in enterprises include:

- CxOs must ensure IT and business leaders work in tandem.

- Ensure generative AI initiatives deliver measurable ROI by, for example, focusing on high-impact use cases; establishing centralized governance; and continuously iterating.

- Plan for the eventual adoption of agentic AI systems, which can act with a high degree of autonomy, requiring little or no human intervention.

To get more details, check out the report’s announcement, the full report and a video of a panel discussion about the report.

For more information about using generative AI for cybersecurity:

- “GenAI’s Impact on Cybersecurity” (InformationWeek)

- “Generative AI in Cybersecurity: Assessing impact on current and future malicious software” (The Alan Turing Institute)

- “How Can Generative AI Be Used In Cybersecurity?” (eWeek)

- “Building a Generative AI-Powered Cybersecurity Workforce” (SANS Institute)

- “GenAI use cases rising rapidly for cybersecurity — but concerns remain” (CSO)

3 - How content provenance tech helps flag AI deepfakes

Organizations must get acquainted with a key technology that tracks the origin of media files, making it possible to verify whether they have been maliciously created or modified to spread falsehoods and misinformation.

That’s the message from the Australian, Canadian, U.K. and U.S. governments, which this week jointly published the document “Content Credentials: Strengthening Multimedia Integrity in the Generative AI Era.”

“Advanced tools that allow the easy creation, alteration, and dissemination of digital content are now more accessible and sophisticated than ever before,” the 25-page document reads.

“This escalation threatens organizations’ security, with AI-generated media being used for impersonations, fraudulent communications, and brand damage. Therefore, restoring transparency has never been more urgent,” the document adds.

The technology in question is called Content Credentials, and, according to the document, it’s in the process of becoming global ISO standard 22144. It tracks the provenance of media files by logging their creation and subsequent changes, and storing that information as cryptographically signed, tamper-evident metadata.
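The idea of a tamper-evident provenance log can be illustrated with a short sketch. This is not the Content Credentials format or the C2PA specification: it is a simplified stand-in that chains records together and signs each one with an HMAC from the Python standard library, whereas real implementations rely on certificate-based signatures. The names `SECRET_KEY`, `add_entry` and `verify` are hypothetical.

```python
import hashlib
import hmac
import json

SECRET_KEY = b"demo-key-not-for-production"  # hypothetical; real systems use certificates


def _sign(payload: dict) -> str:
    """Return an HMAC-SHA256 signature over a canonical JSON encoding."""
    body = json.dumps(payload, sort_keys=True).encode()
    return hmac.new(SECRET_KEY, body, hashlib.sha256).hexdigest()


def add_entry(log: list, action: str, content: bytes) -> None:
    """Append a record bound to the content hash and to the previous record."""
    payload = {
        "action": action,
        "content_sha256": hashlib.sha256(content).hexdigest(),
        "prev_signature": log[-1]["signature"] if log else None,
    }
    log.append({**payload, "signature": _sign(payload)})


def verify(log: list, content: bytes) -> bool:
    """Check every signature, every chain link and the final content hash."""
    prev = None
    for entry in log:
        payload = {k: v for k, v in entry.items() if k != "signature"}
        if payload["prev_signature"] != prev:
            return False
        if not hmac.compare_digest(entry["signature"], _sign(payload)):
            return False
        prev = entry["signature"]
    return bool(log) and log[-1]["content_sha256"] == hashlib.sha256(content).hexdigest()


# Illustrative history: a file is created, then cropped.
history: list = []
add_entry(history, "created", b"original image bytes")
add_entry(history, "cropped", b"cropped image bytes")
```

With this structure, `verify(history, b"cropped image bytes")` succeeds, while changing the file contents or rewriting any earlier record causes verification to fail, which is the property "tamper-evident" refers to.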

Co-authored by the U.S. National Security Agency, the Australian Cyber Security Centre, the Canadian Centre for Cyber Security and the U.K. National Cyber Security Centre, the document seeks to:

- Explain how Content Credentials can provide media-provenance transparency

- Create awareness about how Content Credentials is being developed

- Recommend best practices for how to preserve provenance data

- Stress why broadly adopting Content Credentials is critical

The Content Credentials technical specification is developed and maintained by the Coalition for Content Provenance and Authenticity (C2PA) and implemented by the Content Authenticity Initiative (CAI).

For more information about Content Credentials:

- “The inside scoop on watermarking and content authentication” (MIT Technology Review)

- “Best Inventions of 2024: Content Credentials” (Time Magazine)

- “What are Content Credentials? Here's why Adobe's new AI keeps this metadata front and center” (ZDNet)

- “New technology to show why images and video are genuine launches on BBC News” (BBC)

- “Not Sure if an Image Is AI? Check Its Nutrition Label, Thanks to a New Adobe App” (Cnet)

Video: “Join the movement for content authenticity” (Content Authenticity Initiative)

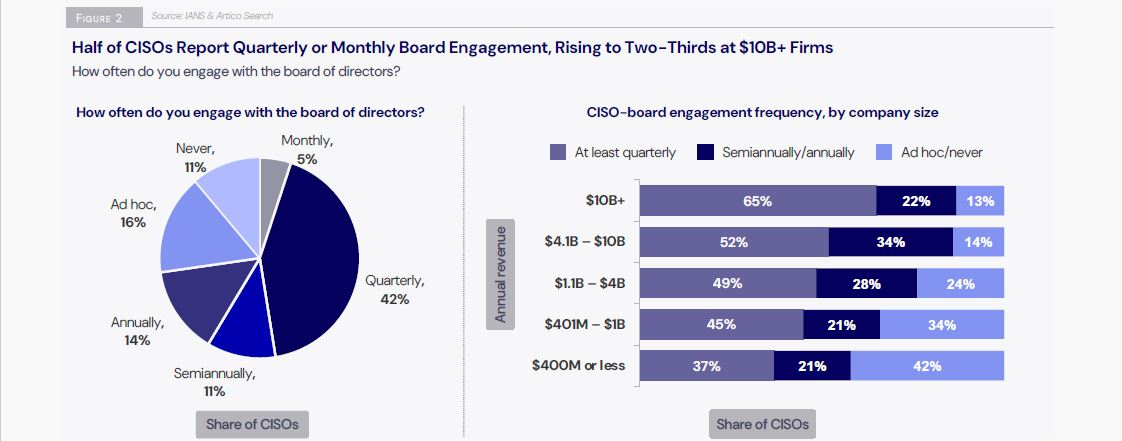

4 - Study: CISOs’ access to the board and CxOs is critical

Organizations where the CISO works closely with the board of directors and with fellow CxOs have stronger security programs than organizations where this collaboration is weaker.

In addition, CISOs with strong ties to their boards and CxOs tend to be happier at work and to earn more.

Those are two findings from the “State of the CISO 2025 Report” from IANS Research and Artico Search, based on a survey of 830 security executives.

“This report demonstrates that board engagement and C-suite access is critical in shaping the future of a security program and a CISO’s career,” Steve Martano, IANS Faculty and Executive Cyber Recruiter at Artico Search, said in a statement.

Yet, only 28% of survey respondents fell into the category of “Strategic CISO,” defined as one with outstanding C-suite access and boardroom influence.

The largest group – 50% – were deemed “Functional CISOs,” who despite having “significant influence” nonetheless lack consistent visibility with the board or CxOs.

The rest – 22% – were classified as “Tactical CISOs” because they focus mostly on technology and have minimal interaction with the C-suite and the board.

(Source: “State of the CISO 2025 Report” from IANS Research and Artico Search, January 2025)

Obviously, the recommendation is for all CISOs to rise to the category of “Strategic CISO,” as close communication and collaboration with the board and fellow CxOs -- including CFOs -- is essential to align the security program with the business strategy.

For CISOs to have optimal communication with board members and CxOs, the report recommends that they:

- Volunteer for projects and committees to explain to fellow business leaders the security angle of these cross-functional initiatives.

- Delegate tactical and operational tasks to team members so CISOs have more time for strategic work.

- Address strategic governance issues, instead of limiting themselves to technical topics, and that way act as a partner to the other CxOs.

To get more details, check out:

- The blog “Build CISO Strategic Impact and Visibility”

- The “State of the CISO 2025 Report”

- The announcement “IANS Research and Artico Search Unveil The State of the CISO, 2025 Report”

5 - Tenable: What are your patch management challenges?

During our recent webinar “From Reactive to Proactive: Expert Guide to Effective Remediation Automation,” we polled attendees about their struggles with patch management. Check out what they said.

(124 webinar attendees polled by Tenable, January 2025)

Check out the on-demand webinar to learn about actionable strategies and proven approaches for streamlining remediation, improving patching efficiency and reducing risk.

To learn more about patch management and vulnerability management, check out these Tenable resources:

- “Elevate Your Vulnerability Remediation Maturity” (white paper)

- “Context Is King: From Vulnerability Management to Exposure Management” (blog)

- “The State of Vulnerability Management” (white paper)

- “What is patch management?” (article)

Video: “Key Elements of Effective Exposure Response”

6 - Data breach report: Many incidents preventable with standard cyber

Almost 200 data breaches that occurred in the U.S. last year -- including several of the largest ones -- could have been prevented via the use of well-known cybersecurity practices.

That’s one of the findings from the Identity Theft Resource Center’s “2024 Data Breach Report,” which was published this week and is the latest reminder of the importance of adopting foundational cybersecurity tools and procedures.

“A significant number of data compromises could have been avoided with basic cybersecurity,” ITRC President James E. Lee wrote in the report’s introduction.

Specifically, four of 2024’s “mega-breaches,” which collectively resulted in the issuance of 1.24 billion victim notices, were deemed preventable through cybersecurity processes and techniques, including:

- multi-factor authentication (MFA) or passkeys

- secure software development

- vulnerability patching

- security awareness training

Here are other key findings from the report:

- U.S. data compromises recorded in 2024 totaled 3,158, down 1% from 2023. Most of the incidents – 90% – were categorized as data breaches.

- Cyberattacks caused the majority – 80% – of data breaches. The rest were caused by system and human errors; physical attacks; and supply chain attacks.

- Victim notices skyrocketed 312% to 1.72 billion, driven by 2024's six “mega-breaches,” each of which generated at least 100 million notices.

- The hardest hit industry was financial services, followed by healthcare and professional services.
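To put the percentages above in perspective, the following sketch works backward from the figures quoted in this section. The results are rough approximations derived from rounded numbers, not figures taken from the ITRC report, and the variable names are made up for illustration.

```python
# Figures as quoted in this section.
notices_2024 = 1.72e9   # victim notices in 2024
increase_pct = 312      # percent increase over 2023
compromises = 3158      # U.S. data compromises in 2024

# A 312% increase means 2024 is 4.12 times the 2023 total.
implied_notices_2023 = notices_2024 / (1 + increase_pct / 100)

# 90% of compromises were data breaches; 80% of those were caused by cyberattacks.
breaches = round(compromises * 0.90)
cyberattack_breaches = round(breaches * 0.80)

print(f"Implied 2023 victim notices: about {implied_notices_2023 / 1e9:.2f} billion")
print(f"Approximate data breaches: {breaches}")
print(f"Approximate breaches caused by cyberattacks: {cyberattack_breaches}")
```

In other words, the quoted growth rate implies that victim notices roughly quadrupled in a single year, even though the number of compromises was essentially flat.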

To get more information, check out the ITRC’s report announcement and the full report.

For more information about data security:

- “How To Protect Your Cloud Environments and Prevent Data Breaches” (Tenable)

- “Protecting Sensitive and Personal Information from Ransomware-Caused Data Breaches” (CISA)

- “Know Your Exposure: Is Your Cloud Data Secure in the Age of AI?” (Tenable)

- “Preventing data breaches” (Australian Cyber Security Centre)

- “Why data breaches have become ‘normalized’ and 6 things CISOs can do to prevent them” (VentureBeat)
