Cybersecurity Snapshot: As OpenAI Honcho Hits Capitol Hill, Regulatory Storm Clouds Form Over AI La La Land

Check out what OpenAI’s CEO told lawmakers in Congress about regulating AI. Meanwhile, an upcoming EU law could cramp ChatGPT’s style. Also, the public’s nail biting over AI is real. In addition, a new AI chatbot may soon answer your burning questions about the dark web. And much more!

Dive into six things that are top of mind for the week ending May 19.

1 – Mr. Altman goes to Washington

Lively discussions around how much government control there should be over artificial intelligence (AI) products continue globally – and cybersecurity teams should closely monitor which way the regulatory winds are blowing.

The latest high-profile debate happened this week in Washington, D.C., where Sam Altman, CEO of ChatGPT-maker OpenAI, testified before the U.S. Senate Judiciary Committee’s Subcommittee on Privacy, Technology and the Law.

After detailing the benefits of OpenAI’s generative AI products and their privacy and security features, Altman told lawmakers that regulation is key to preventing AI from being misused and abused, but he also cautioned against excessive government oversight.

“OpenAI believes that regulation of AI is essential, and we’re eager to help policymakers as they determine how to facilitate regulation that balances incentivizing safety while ensuring that people are able to access the technology’s benefits,” he said.

So what did Altman recommend to the U.S. Congress as it mulls AI laws and regulations? Here’s a quick rundown:

- A requirement that AI companies submit their products to not just internal but also external testing prior to releasing them, and that they publish the results

- Flexibility from the U.S. government to adapt its AI rules because AI technology is complex and evolves rapidly

- Collaboration and cooperation among governments globally as they draft laws and regulations

“We believe that government and industry together can manage the risks so that we can all enjoy the tremendous potential,” Altman said.

For more information, check out Altman’s prepared remarks; coverage from Gizmodo, Politico and The Guardian; and these videos:

Top Moments From ChatGPT Creator's Congressional Testimony (CNET)

OpenAI CEO Testifies Before Senate Judiciary Committee About Artificial Intelligence (Forbes)

2 – AI experts: Generative AI trips responsible AI programs

As organizations adopt generative AI technology, most of their responsible AI (RAI) programs are unprepared to address the risks involved. At least, that’s the opinion of the majority of AI experts consulted by MIT Sloan Management Review and Boston Consulting Group.

(Chart: “Most RAI programs are unprepared to address the risks of new generative AI tools.” Source: MIT Sloan Management Review, based on a poll of 19 AI experts.)

The panel of experts identified three main reasons why organizations struggle to manage the risks of generative AI tools:

- These tools are “qualitatively different” from other AI wares

- These tools introduce new types of AI risks

- RAI programs can’t keep up with generative AI advancements

Still, there’s hope, according to the MIT Sloan Management Review article titled “Are Responsible AI Programs Ready for Generative AI? Experts Are Doubtful.”

“Many of our experts asserted that an approach that emphasizes the core RAI principles embedded in an organization’s DNA, coupled with an RAI program that is continually adapting to address new and evolving risks, can help,” reads the article, which outlines detailed recommendations.

For more information about responsible AI:

- “8 Questions About Using AI Responsibly, Answered” (Harvard Business Review)

- “Responsible AI at Risk: Understanding and Overcoming the Risks of Third-Party AI” (MIT Sloan Management Review)

- “CSA Offers Guidance on How To Use ChatGPT Securely in Your Org” (Tenable)

- “How AI ethics is coming to the fore with generative AI” (ComputerWeekly)

- “ChatGPT Use Can Lead to Data Privacy Violations” (Tenable)

3 – Surveys: AI gives most in the U.S. the heebie-jeebies

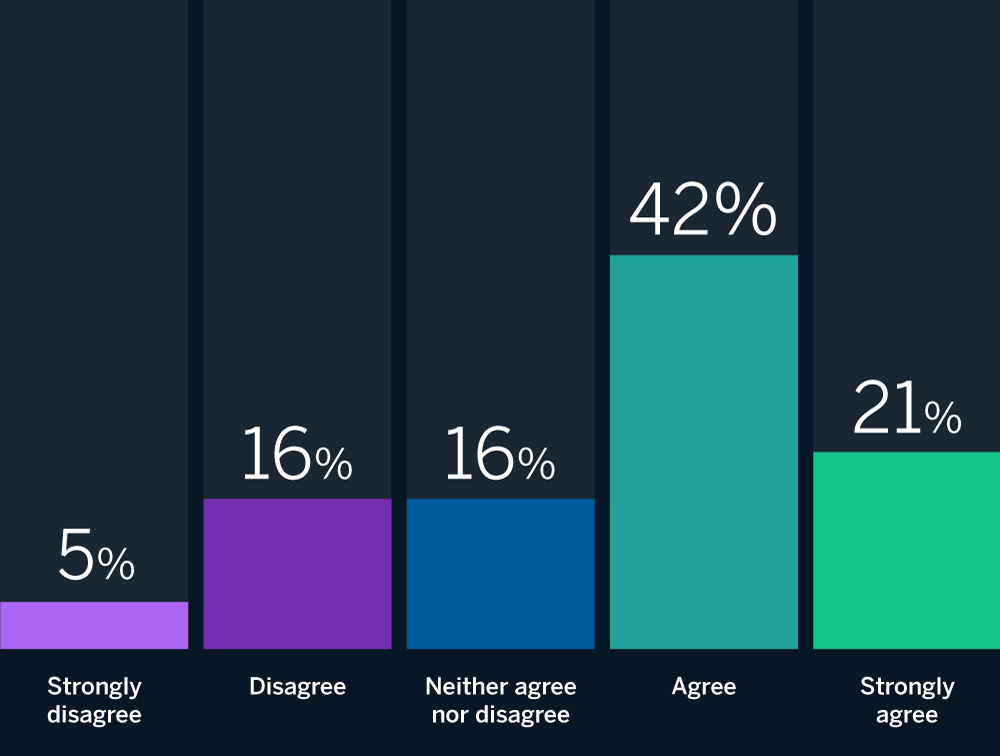

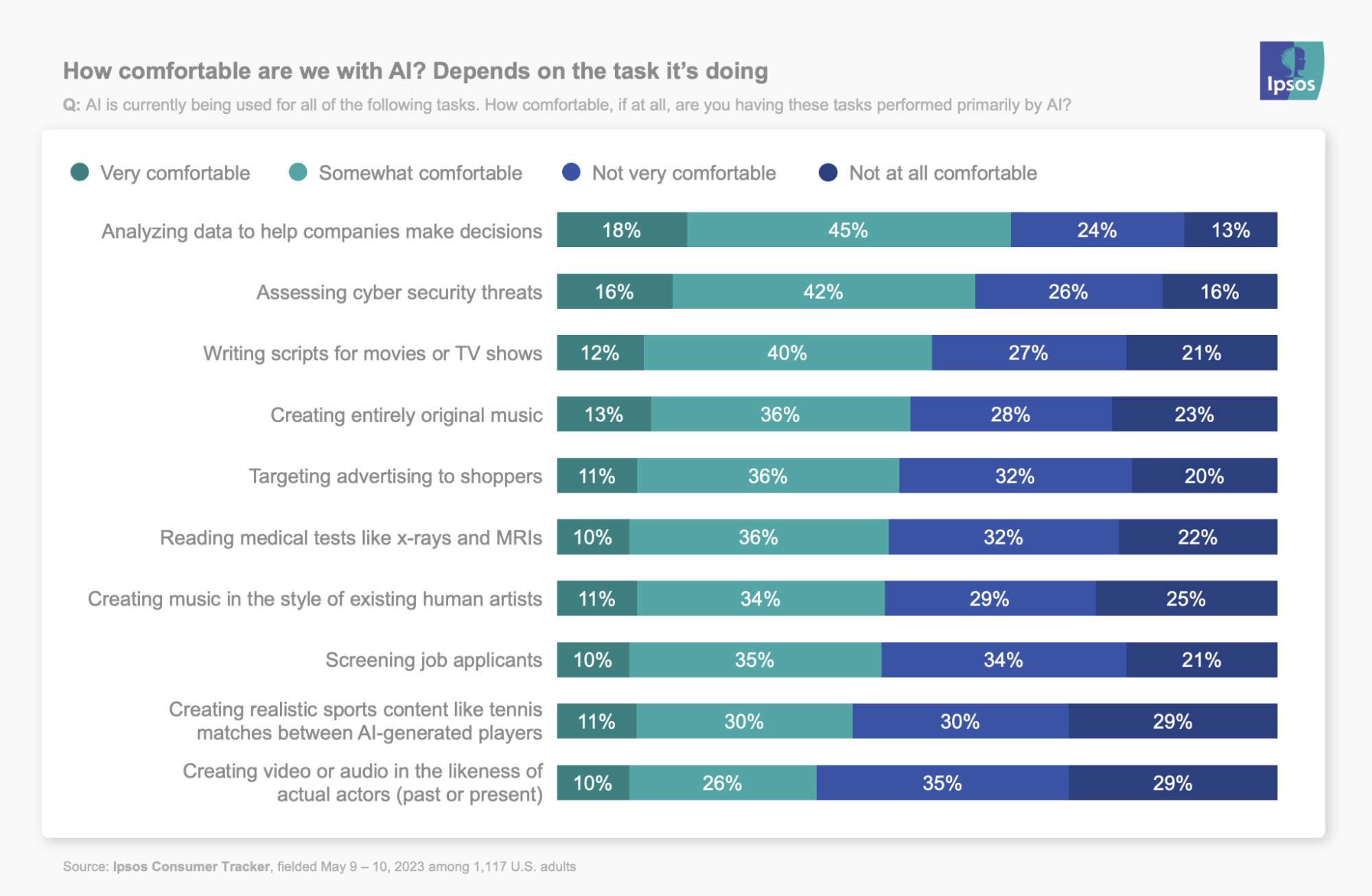

AI has many people in the U.S. concerned – even when the technology is used for cybersecurity defense purposes.

In an Ipsos online poll of 1,117 U.S. adults released this week, only 16% said they’re “very comfortable” about the use of AI for assessing cybersecurity threats. The rest rated themselves as “somewhat comfortable” (42%), “not very comfortable” (26%) and “not at all comfortable” (16%).

Ipsos also found that almost half of respondents (46%) feel that AI tools are being developed too quickly, while 30% said they’re being developed at the right speed.

Meanwhile, a separate Reuters / Ipsos poll of 4,415 U.S. adults conducted online and also released this week found that:

- 61% of respondents said that AI “poses risks to humanity,” compared with only 22% who disagreed and 17% who didn’t express an opinion

- More than two-thirds of respondents are concerned about AI’s negative effects

Similar misgivings about the negative impact of AI also surfaced in another survey with a narrower scope. In an online poll of more than 400 readers of Boston.com, only 15% declared themselves “unconcerned” or optimistic about AI, while the rest felt a level of concern – 27% had “some concerns,” 40% described themselves as “fairly worried” and 18% said they were “close to panic.”

For more information about public perception of AI:

- “Limited enthusiasm in U.S. over AI’s growing influence in daily life” (Pew Research Center)

- “MITRE-Harris Poll Finds Lack of Trust Among Americans in AI Tech” (MITRE)

- “Why are we so afraid of AI?” (The Washington Post)

- “ChatGPT Thinks Americans Are Excited About AI. Most Are Not.” (FiveThirtyEight)

- “Americans Support Congressional AI Action” (Tech Oversight Project)

4 – The EU’s AI law could rock ChatGPT’s world

As we monitor the establishment of legal and regulatory guardrails around AI globally, a major development will be the enactment of the European Union’s AI law, whose approval process marches on.

Called the Artificial Intelligence Act, the law, among other things, will likely force some AI vendors, such as ChatGPT maker OpenAI, to prove that the data used to train their products doesn’t violate copyright law.

In an announcement last week, the European Parliament touted the latest progress for the AI law and outlined key recent additions and changes to its draft, including specific provisions aimed at generative AI products.

“Generative foundation models, like GPT, would have to comply with additional transparency requirements, like disclosing that the content was generated by AI, designing the model to prevent it from generating illegal content and publishing summaries of copyrighted data used for training,” reads the statement.

For more information:

- “Europe takes aim at ChatGPT with what might soon be the West’s first A.I. law” (CNBC)

- “The EU and U.S. diverge on AI regulation” (Brookings Institution)

- “Massive Adoption of Generative AI Accelerates Regulation Plans” (Infosecurity Magazine)

- “EU lawmakers back transparency and safety rules for generative AI” (TechCrunch)

VIDEOS

AI regulation and privacy: How legislation could transform the AI industry (Yahoo Finance)

The EU's AI Act: A guide to understanding the ambitious plans to regulate artificial intelligence (Euronews Next)

5 – Hello DarkBERT, my new friend

Do you have questions about the dark web – that sinister, creepy side of the internet where lawlessness reigns? DarkBERT may soon be here to help.

The generative AI chatbot is the brainchild of a group of South Korean researchers who wanted to provide a large language model (LLM) that could assist with cybersecurity research into the dark web. They trained DarkBERT on this seamy part of the internet by crawling the Tor network.

“We show that DarkBERT outperforms existing language models with evaluations on dark web domain tasks, as well as introduce new datasets that can be used for such tasks. DarkBERT shows promise in its applicability on future research in the dark web domain and in the cyber threat industry,” the researchers wrote in their paper about the AI chatbot.

DarkBERT isn’t available yet to the public. Its creators plan to release it at some point, but they haven’t specified a date.

For more information:

- “DarkBERT is the AI that deciphers the language of the dark web” (Crast)

- “Scientists Train New AI Exclusively on the Dark Web” (Futurism)

- “Dark Web ChatGPT Unleashed: Meet DarkBERT” (Tom’s Hardware)

- “Meet DarkBERT, The Only AI Trained On The Dark Web” (IFLScience)

- “New AI DarkBERT is trained on the Dark Web” (Dexerto)

6 – CIS white paper tackles IoT security

The Center for Internet Security (CIS) has just published the white paper “Internet of Things: Embedded Security Guidance” with the goal of helping IoT vendors more easily build security into their products by design and by default.

CIS Chief Technology Officer Kathleen Moriarty, the white paper’s author, explains in a blog post that the 74-page document provides prescriptive recommendations and vetted research from IoT experts, and that it can be used not only by IoT vendors but also by their customers.

“If you’re an organization, you can begin the conversation with your vendors on embedded IoT security requirements for your products. You can specifically use this document as an educational tool and potentially provide it as a guide for the vendor,” she wrote.

For more information about IoT security:

- “IoT Security Acquisition Guidance” (CISA)

- “Ten best practices for securing IoT in your organization” (ZDNet)

- “4 advanced IoT security best practices to boost your defense” (TechTarget)

- “Securing the IoT Supply Chain” (IoT Security Foundation)

- “NIST cybersecurity for IoT program” (NIST)

VIDEO

Do IoT Devices Make Your Network Unsecure? (Cyber Gray Matter)
