Cybersecurity Snapshot: CISA Tells Tech Vendors To Squash Command Injection Bugs, as OpenSSF Calls on Developers To Boost Security Skills

Check out CISA’s call for weeding out preventable OS command injection vulnerabilities. Plus, the Linux Foundation and OpenSSF spotlight the lack of cybersecurity expertise among SW developers. Meanwhile, GenAI deployments have tech leaders worried about data privacy and data security. And get the latest on FedRAMP, APT40 and AI-powered misinformation!

Dive into six things that are top of mind for the week ending July 12.

1 - CISA: Eradicate OS command injection vulnerabilities

Technology vendors should stamp out OS command injection bugs, which allow attackers to execute commands on a victim’s host operating system. So said the U.S. Cybersecurity and Infrastructure Security Agency (CISA) and the FBI in an alert published this week.

“OS command injection vulnerabilities have long been preventable by clearly separating user input from the contents of a command,” reads the document, titled “Eliminating OS Command Injection Vulnerabilities.”

Still, OS command injection vulnerabilities remain prevalent, and have been at the center of recent attacks exploiting CVE-2024-20399, CVE-2024-3400 and CVE-2024-21887, which allowed hackers to execute code remotely on network edge devices, CISA and the FBI said.

Command injection attacks become possible when an application with insufficient input validation passes unsafe user-supplied data to a system shell, according to OWASP.
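To make the mechanism concrete, here is a minimal Python sketch, not taken from the CISA alert or from OWASP, that contrasts building a shell command string from user input with passing that input as a separate argument. The function names and the small child program are hypothetical and exist only for illustration.

```python
import subprocess
import sys

# Hypothetical child program used for illustration: it prints its first
# argument and exits. It stands in for any external command.
CHILD = [sys.executable, "-c", "import sys; print(sys.argv[1])"]


def unsafe_command_string(user_input: str) -> str:
    """Vulnerable pattern: user input is concatenated into a shell string.

    The string is returned for inspection only and is never executed here.
    If it were handed to a shell, characters such as ';' would be parsed
    as shell syntax rather than as data.
    """
    return "echo " + user_input


def run_with_argv(user_input: str) -> str:
    """Safer pattern: the input is one element of an argument list.

    No shell is involved, so the input cannot change the command's syntax.
    """
    result = subprocess.run(
        CHILD + [user_input],
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout.rstrip("\n")
```

In this sketch, an input such as `hello; echo INJECTED` would become two commands if the concatenated string were passed to a shell, whereas `run_with_argv` hands it to the child program as a single literal argument.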

CEOs and business leaders should direct their tech leaders to analyze past occurrences of this type of vulnerability in their organizations’ products and prevent them going forward, according to the alert.

Specifically, CISA and the FBI recommend that technical leaders:

- Make sure software preserves the intended syntax of commands and their arguments

- Assess their threat models

- Employ modern component libraries

- Conduct code reviews

- Subject their products to aggressive adversarial testing
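As one possible illustration of the first recommendation, the Python sketch below validates input against an allow-list before placing it in an argument list, so the command and its arguments keep their intended shape. The pattern, the function name and the choice of `nslookup` as the example command are assumptions made for this sketch; they are not drawn from the alert.

```python
import re

# Assumed allow-list for illustration: letters, digits, dots and hyphens,
# starting and ending with a letter or digit. A leading hyphen is rejected
# so the value cannot be mistaken for a command-line option.
HOSTNAME_PATTERN = re.compile(r"[A-Za-z0-9](?:[A-Za-z0-9.-]{0,251}[A-Za-z0-9])?")


def build_lookup_argv(hostname: str) -> list[str]:
    """Return an argument list for a hypothetical DNS lookup command.

    The hostname is checked against the allow-list first and then kept as
    a single, separate argument. Nothing is executed here.
    """
    if not HOSTNAME_PATTERN.fullmatch(hostname):
        raise ValueError(f"rejected hostname: {hostname!r}")
    return ["nslookup", hostname]
```

Validation of this kind complements, rather than replaces, keeping user input out of shell command strings.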

For more information about preventing OS command injection vulnerabilities:

- “CWE-78: Improper Neutralization of Special Elements used in an OS Command ('OS Command Injection')” (MITRE)

- “Input Validation Cheat Sheet” (OWASP)

- “CVE-2024-3400: Zero-Day Vulnerability in Palo Alto Networks PAN-OS GlobalProtect Gateway Exploited in the Wild” (Tenable)

- “CVE-2023-46805, CVE-2024-21887: Zero-Day Vulnerabilities Exploited in Ivanti Connect Secure and Policy Secure Gateways” (Tenable)

VIDEO

What is command injection? (PortSwigger)

2 - Study finds that SW developers lack cybersecurity skills

Software developers need more cybersecurity training and expertise.

That’s the main takeaway from the “Secure Software Development Education 2024 Survey” report from the Linux Foundation and the Open Source Security Foundation (OpenSSF).

Specifically, 28% of software developers polled said they’re not familiar with secure software development practices. The survey, which polled almost 400 software developers, was conducted to determine software development education needs.

“Our survey has revealed significant gaps in the current state of secure software development knowledge and training among professionals. A substantial portion of developers, including those with extensive experience, lack familiarity with secure development practices,” the report reads.

Other key findings include:

- 69% of respondents rely on on-the-job experience as their main source of secure software development knowledge

- 53% haven’t taken a secure software development course

- Half of respondents cite lack of training as a major challenge

The report also found that developers’ training needs aren’t uniform. Rather, they vary based on roles and experience levels.

Also interesting: A majority of respondents view the security of emerging areas like AI, machine learning and supply chain as critical for future innovation.

Wondering what cybersecurity areas software developers should focus on? These are some topics that respondents recommend taking courses on:

- Automated security testing

- Defensive programming

- Supply chain security

- Software bill of materials

- Vulnerability analysis

- Secure API development

- Cloud computing configurations

To get more details, check out:

- The blog “Why are Organizations Struggling to Implement Secure Software Development?” from the Linux Foundation and OpenSSF

- The report “Secure Software Development Education 2024 Survey”

For more information about the importance of cybersecurity skills among developers:

- “CISA official: Comp Sci programs must add security courses” (Tenable)

- “NIST issues cybersecurity guide for AI developers” (IT World Canada)

- “7 Tips for Effective Cybersecurity Training for Developers” (DZone)

- “Tailor security training to developers to tackle software supply chain risks” (CSO)

- “We Must Consider Software Developers a Key Part of the Cybersecurity Workforce” (CISA)

3 - Data privacy and security among GenAI adoption challenges

Data privacy and data security rank high among the concerns of IT leaders in organizations that are adopting generative AI, according to the report “Generative AI: Strategies for a Competitive Advantage” from SAS Institute, which surveyed 1,600 IT decision makers globally.

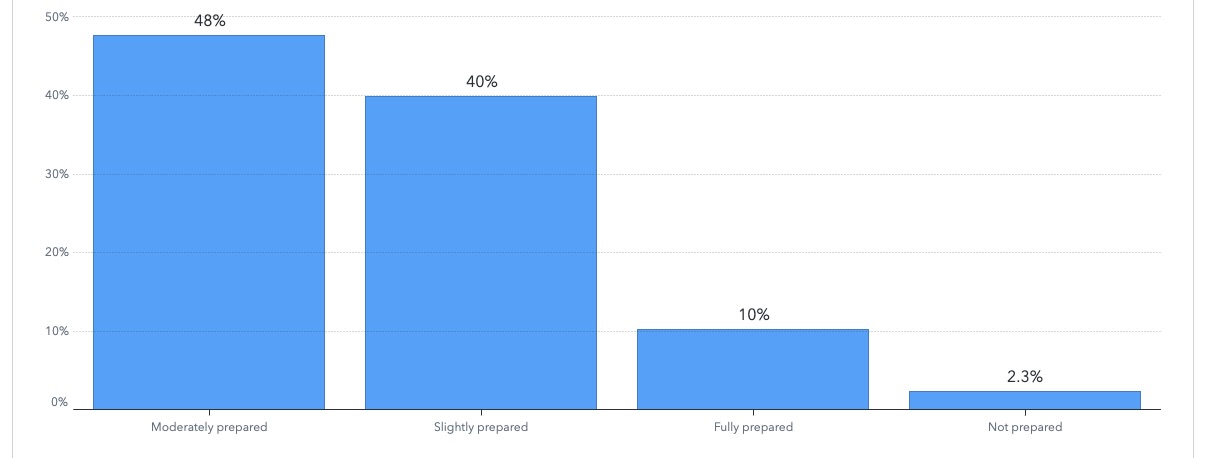

Specifically, 76% of respondents cited being concerned about data privacy, and 75% about data security. Meanwhile, only 10% feel “fully prepared” to comply with AI regulations, according to the report, which was released this week.

How prepared is your organization to comply with current and upcoming regulations concerning generative AI?

(Source: “Generative AI: Strategies for a Competitive Advantage” from SAS Institute, July 2024)

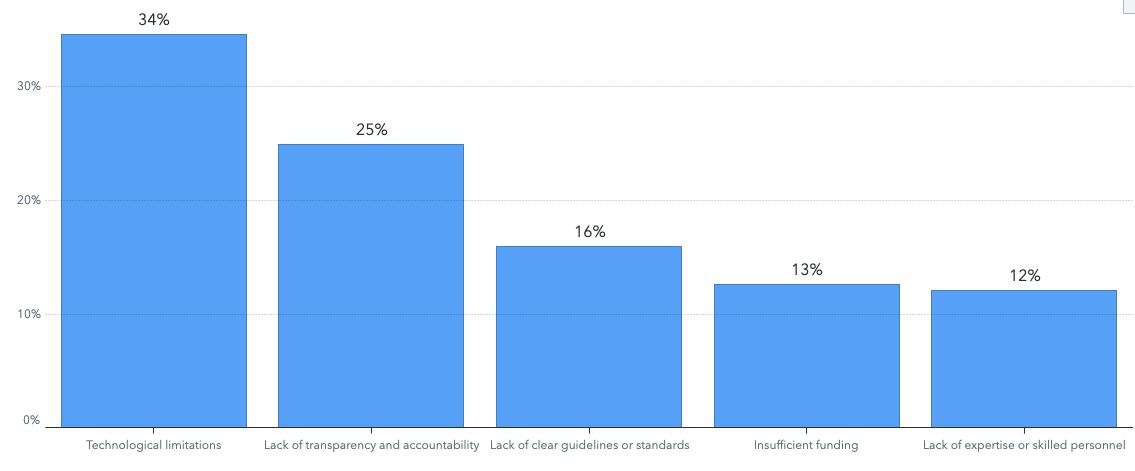

Cited by 34% of respondents, technical limitations ranked as the top challenge when implementing generative AI governance and monitoring. Lack of transparency and accountability (25%) came in second.

What’s your biggest challenge in implementing effective governance and monitoring for generative AI?

(Source: “Generative AI: Strategies for a Competitive Advantage” from SAS Institute, July 2024)

Other key findings from the report include:

- Only 9% of respondents feel “extremely familiar” with their organization’s adoption of generative AI, while 37% are “very familiar.”

- 61% of organizations have a usage policy for generative AI.

- 86% plan to invest in generative AI in the next financial year.

To get more details, check out:

- The report’s announcement

- Report highlights

- The full report

For more information about adopting generative AI securely and responsibly:

- “6 Best Practices for Implementing AI Securely and Ethically” (Tenable)

- “How businesses can responsibly adopt generative AI” (TechRadar)

- “7 generative AI challenges that businesses should consider” (TechTarget)

- “Implementing generative AI with speed and safety” (McKinsey)

- “AI is the talk of the town, but businesses are still not ready for it” (CNBC)

4 - Preventing attacks from China-sponsored APT40

Cybersecurity teams interested in learning how to protect their organizations from nation-state cybercrime group APT40 should check out a new advisory published this week.

The document, titled “People’s Republic of China (PRC) Ministry of State Security APT40 Tradecraft in Action,” summarizes recent APT40 activity in Australia; describes the group’s tactics, techniques and procedures; and details two attack case studies.

“The case studies are consequential for cybersecurity practitioners to identify, prevent and remediate APT40 intrusions against their own networks,” reads the advisory, which is based on incident response investigations conducted by the Australian Cyber Security Centre (ACSC).

A variety of law enforcement and cybersecurity agencies from Canada, Germany, Japan, New Zealand, South Korea, the U.S. and the U.K. collaborated with the ACSC. APT40 regularly looks for opportunities to attack networks in all of these countries. The group is particularly good at exploiting newly disclosed vulnerabilities quickly.

“Notably, APT40 possesses the capability to rapidly transform and adapt exploit proof-of-concept(s) (POCs) of new vulnerabilities and immediately utilise them against target networks possessing the infrastructure of the associated vulnerability,” the advisory reads.

To get more details, check out:

- The advisory “People’s Republic of China (PRC) Ministry of State Security APT40 Tradecraft in Action”

- The CISA alert “CISA and Partners join ASD’S ACSC to Release Advisory on PRC State-Sponsored Group, APT 40”

5 - FBI: Russia using AI tool to supercharge misinformation efforts

Since early 2022, the RT News Network media organization, sponsored by the Russian government, has used a covert artificial intelligence tool called Meliorator to intensify its production of misinformation.

Specifically, affiliates of RT News Network have used this AI software package to generate fake profiles on X (formerly Twitter) that appear to belong to people of multiple nationalities, and to spread disinformation that supports Russian government objectives.

That’s according to the FBI, which this week issued the advisory “State-Sponsored Russian Media Leverages Meliorator Software for Foreign Malign Influence Activity” in partnership with law enforcement agencies from Canada, the Netherlands and Poland.

The Meliorator software is capable of:

- Creating massive numbers of fake social-media personas that appear authentic

- Generating content that appears similar to that posted by typical social media users

- Incorporating and relaying other bots' misinformation

- Amplifying existing false narratives

- Crafting messages that correspond with the bot’s profile characteristics

Although it currently works only on X, Meliorator will likely be adapted to other social media platforms, said the FBI, whose mitigation recommendations for social media platforms include:

- Implement processes to validate that accounts are created and operated by humans who respect the platform’s terms of use

- Review and update authentication and verification processes

- Adopt protocols to identify and flag suspicious users

- Make user accounts secure by default by, for example, requiring multi-factor authentication and adopting privacy settings by default

Meanwhile, the U.S. Justice Department (DOJ) announced the seizure of two domain names and a search warrant for almost 1,000 social media accounts used in RT News Network’s AI-powered social media bot farm. X suspended the bot accounts identified in the DOJ’s search warrant.

“Today’s actions represent a first in disrupting a Russian-sponsored Generative AI-enhanced social media bot farm,” FBI Director Christopher Wray said in a DOJ statement.

To get more details, check out:

- The DOJ’s announcement “Justice Department Leads Efforts Among Federal, International, and Private Sector Partners to Disrupt Covert Russian Government-Operated Social Media Bot Farm”

- The FBI’s advisory “State-Sponsored Russian Media Leverages Meliorator Software for Foreign Malign Influence Activity”

For more information about the threat of AI-powered misinformation to businesses:

- “Disinformation is harming businesses. Here are 6 ways to fight it” (CNN)

- “Deepfakes Pose Businesses Risks – Here's What To Know” (Booz Allen)

- “How to protect your business from deepfakes” (Bank of America)

- “Algorithms are pushing AI-generated falsehoods at an alarming rate. How do we stop this?” (The Conversation)

- “How to Protect Your Enterprise from Deepfake Damage” (InformationWeek)

6 - FedRAMP to expedite inclusion of GenAI tools in its marketplace

FedRAMP, the U.S. government program that certifies the security of cloud computing wares for use by federal agencies, is accelerating the evaluation of emerging technologies, including generative AI tools.

FedRAMP has published its Emerging Technology Prioritization Framework, which includes a list of generative AI products that will be the first batch of emerging technologies to be prioritized.

“The framework is designed to expedite the inclusion of emerging technologies in the FedRAMP Marketplace, so agencies can more easily use modern tools to deliver on their missions,” reads FedRAMP’s announcement of the framework.

Using the new framework, FedRAMP expects to initially prioritize up to 12 AI-based cloud services in these categories: chat interfaces; code-generation and debugging tools; prompt-based image generators; and APIs that provide these functions.

Vendors that offer these AI-based cloud services have until August 31, 2024, to request that their offerings be included in the initial round of prioritization by completing the Emerging Technology Cloud Service Offering Request Form and the Emerging Technology Demand Form.
