Cybersecurity Snapshot: How Enterprise Cyber Leaders Can Tame the ChatGPT Beast

Check out a guide written for CISOs by CISOs on how to manage the risks of using generative AI in your organization. Plus, the White House unveils an updated national AI strategy. Also, a warning about a China-backed attacker targeting U.S. critical infrastructure. And much more!

Dive into six things that are top of mind for the week ending May 26.

1 – How CISOs can mitigate the risks of generative AI

It’s a common scenario in enterprises today: The business adopts generative artificial intelligence (AI) tools like ChatGPT, while CISOs rush to draft usage policies to manage the security risks of using these newfangled AI products. Not ideal.

To help security leaders, venture capital firm Team8 has published “A CISO’s Guide: Generative AI and ChatGPT Enterprise Risks,” a 34-page document authored by its CISO-in-Residence Gadi Evron and Chief Innovation Officer Bobi Gilburd, in consultation with hundreds of security executives who belong to the company’s CISO Village group.

“Key questions CISOs are asking: Who is using the technology in my organization, and for what purpose? How can I protect enterprise information (data) when employees are interacting with GenAI? How can I manage the security risks of the underlying technology? How do I balance the security tradeoffs with the value the technology offers?” reads the report.

Many questions indeed, but the authors have good news to share: CISOs can manage generative AI risks via an enterprise-wide policy developed in collaboration with all key stakeholders.

“This document’s recommendation is for enterprises to implement cross-organizational working groups to evaluate the risks, perform threat modeling exercises, and implement guardrails specific to their environment based on the risks highlighted,” the authors wrote.

Specific strategies suggested include:

- Identify risks and their potential impact

- Draft policies on who can use these tools and how, in order to mitigate the risks

- Choose generative AI providers with customizable security and policies

- Consider on-premises alternatives under your organization’s control

To get all the details, read the report, which is free to download.

For more information about using generative AI tools like ChatGPT securely and responsibly, check out these Tenable blogs:

- “CSA Offers Guidance on How To Use ChatGPT Securely in Your Org”

- “As ChatGPT Concerns Mount, U.S. Govt Ponders Artificial Intelligence Regulations”

- “As ChatGPT Fire Rages, NIST Issues AI Security Guidance”

- “A ChatGPT Special Edition About What Matters Most to Cyber Pros”

- “ChatGPT Use Can Lead to Data Privacy Violations”

VIDEO

CSO Executive Sessions with Charles Gillman, CISO at SuperChoice (CSO Online)

2 – White House updates AI research and development roadmap

And speaking of attempts to steer AI in the right direction, the Biden administration this week announced an update to the National AI R&D Strategic Plan, which was first published in 2016 and last revised in 2019.

The document, whose goal is to promote AI innovation without compromising people’s safety, rights and privacy, outlines nine core strategic priorities, the last of which is new in this updated version: To establish a principled and coordinated approach to international collaboration in AI research.

This new strategic priority seeks, among other things, to promote development and implementation of international guidelines and standards for AI, as well as foster international collaborations to use AI to tackle global problems in areas like healthcare and the environment.

The other eight strategic priorities are:

- Make long-term investments in responsible AI research, including to advance “foundational” AI capabilities and to measure and manage generative AI risks

- Better grasp how to create AI systems that complement and augment human capabilities, and mitigate the risk of human misuse of AI applications

- Understand, address and mitigate the legal, ethical and societal risks posed by AI

- Ensure that AI systems are safe, trustworthy, reliable and dependable

- Develop shared public datasets and environments for AI training and testing

- Measure and evaluate AI systems through standards and benchmarks

- Better understand the national AI R&D workforce needs

- Expand public-private partnerships to accelerate advances in AI

And that’s not all. Until July 7, the White House is fielding comments from the public to inform its national AI strategy.

For more information:

- “The White House's new AI initiatives focus on investment, education, and feedback” (Yahoo Finance)

- “White House Releases New AI National Frameworks, Educator Recommendations” (Nextgov)

- “White House Seeks Insight on Generative AI Use” (MeriTalk)

- “White House unveils new efforts to guide federal research of AI” (ABC News)

- “White House seeks public comment on national AI strategy” (TechTarget)

VIDEOS

Discussion of Artificial Intelligence (AI) Enabling Science and AI Impacts on Society (The White House)

White House releases AI framework to oversee development, risks (Yahoo Finance)

3 – Study: AI-refined phishing requires heightened fraud awareness

Bad actors have wasted no time using AI to make their phishing attempts – via email, text, social media, chat conversations and voice calls – appear alarmingly legitimate. In turn, people must boost their vigilance to another level.

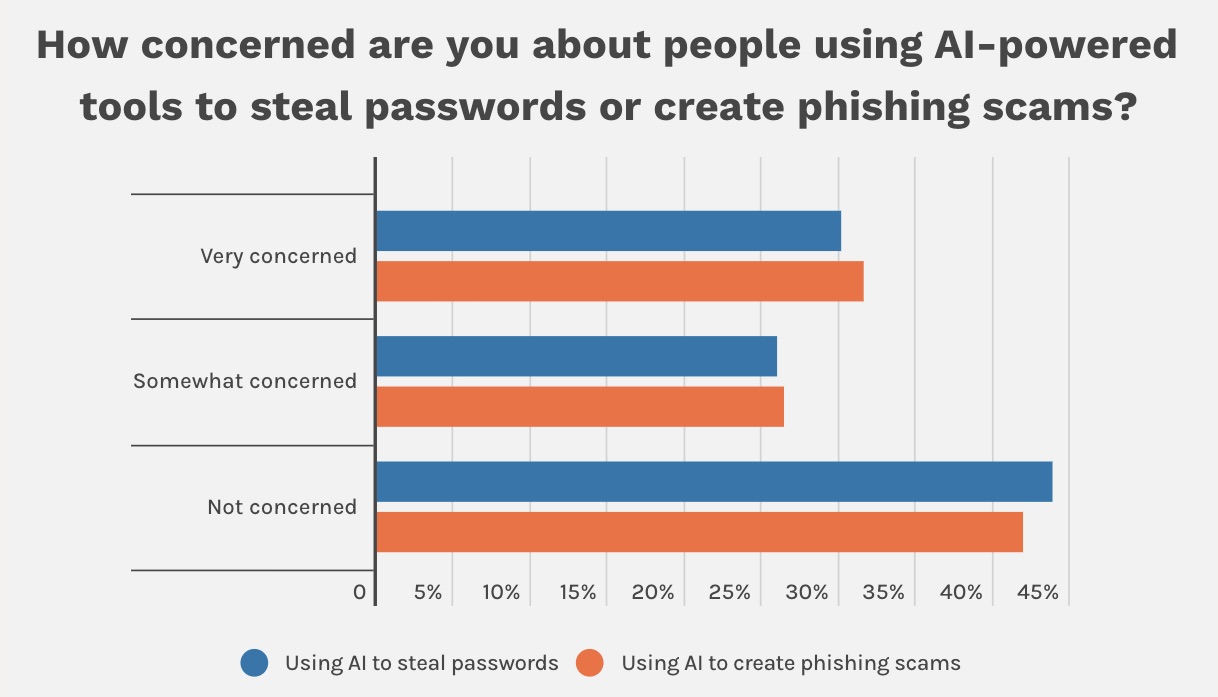

That’s the main takeaway from a recent survey of 1,000 cybersecurity professionals about how AI can help cybercrooks steal passwords more easily and effectively by enhancing their phishing communications.

Conducted by Pollfish and commissioned by Password Manager, a website that rates and compares password managers, the study prescribes an “overabundance of caution” and offers several recommendations, including:

- Conduct regular security awareness training to make employees knowledgeable about AI-powered phishing schemes

- Invest in AI defense tools that can sharpen an organization’s ability to detect AI-enhanced phishing communications

- Assume any unsolicited communication is potentially a scam, including voice messages from people you know, because fraudsters are using AI to clone people’s voices

- If you determine you should respond, contact the sender or organization directly via phone or email, instead of hitting “reply”

- Be aware of phishing bots on social media platforms, where they may look and credibly behave like real people

- Add a graphical icon or emoji to your name on social media sites, because phishing bots will include it in communications to you

Other findings from the survey:

- 56% of respondents worry about hackers using AI tools to swipe passwords

- 52% say AI makes it easier for fraudsters to steal information

- 58% say they are concerned about the use of AI to craft phishing attacks

(Source: Password Manager, May 2023)

To get more details, read the full report “1 in 6 Security Experts Say There’s a ‘High-Level’ Threat of AI Tools Being Used to Hack Passwords.”

For more information about AI and phishing:

- “7 guidelines for identifying and mitigating AI-enabled phishing campaigns” (CSO Online)

- “AI in Phishing: Do Attackers or Defenders Benefit More?” (Unite.ai)

- “AI and Cybersecurity: A New Era” (Morgan Stanley)

- “AI-Generated Tax Scams Can Look A Lot Like They Came From The IRS” (Forbes)

- “ChatGPT: A Fraud Fighter’s Friend or Foe?” (InsideBigData)

VIDEOS

AI can replicate voices in high-tech phone call scams (NBC News)

Mom warns of AI voice cloning scam that faked kidnapping (ABC News)

4 – Microsoft: Business email compromise incidents skyrocket

Business email compromise (BEC) attacks jumped in the past year, as threat actors use new and more sophisticated techniques to carry out this type of social engineering scheme, which is aimed at duping employees into disclosing confidential employer data or transferring funds.

That’s according to the latest Cyber Signals report from Microsoft’s Threat Intelligence team, which detected and investigated a whopping 35 million BEC attempts in the 12 months ended in April 2023 – an adjusted average of about 156,000 per day.

In addition, Microsoft detected a significant jump in the use of cybercrime-as-a-service (CaaS) tools for BEC, such as the BulletProftLink platform, which offers attackers an end-to-end service, including templates and hosting. These CaaS BEC attacks rose 38% between 2019 and 2022.

Recommendations for cybersecurity teams include:

- Leverage email security settings, such as features that flag messages from external and/or unverified senders and block them if their identities can’t be confirmed

- Look for email systems that offer advanced phishing protections via the use of AI and machine learning

- Turn on multi-factor authentication for email accounts

- Train employees to spot fraudulent emails, and make them aware of the consequences of BEC scams

- Require employees to verify the identity of organizations and individuals via a phone call before proceeding with a transaction or a request for sensitive information
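
To make the first recommendation concrete, here is a minimal Python sketch of how a mail-processing step might tag messages from external senders. It is not taken from the Microsoft report. The `INTERNAL_DOMAINS` allowlist, the `[EXTERNAL]` tag and the function names are all hypothetical, and in practice this feature is usually switched on in the email platform itself rather than hand-written.

```python
from email import message_from_string
from email.utils import parseaddr

# Hypothetical values for illustration; substitute your own domains and tag.
INTERNAL_DOMAINS = {"example.com"}
EXTERNAL_TAG = "[EXTERNAL] "


def sender_domain(msg):
    """Return the lowercase domain of the From header, or '' if it is absent."""
    _, address = parseaddr(msg.get("From", ""))
    return address.rpartition("@")[2].lower()


def is_external(msg):
    """True when the sender's domain is not in the internal allowlist."""
    return sender_domain(msg) not in INTERNAL_DOMAINS


def flag_external(raw_message):
    """Parse a raw message and prefix the Subject of external mail with a tag."""
    msg = message_from_string(raw_message)
    if is_external(msg):
        subject = msg.get("Subject", "")
        if not subject.startswith(EXTERNAL_TAG):
            # Remove the old header first; assigning alone would add a second one.
            del msg["Subject"]
            msg["Subject"] = EXTERNAL_TAG + subject
    return msg
```

Keep in mind that the From header is easy to spoof, so a tag like this only helps recipients notice outside mail. Confirming a sender's identity still depends on controls such as SPF, DKIM and DMARC, plus the out-of-band phone verification recommended above.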

To get more details, read the Cyber Signals report “The Confidence Game: Shifting Tactics Fuel Surge in Business Email Compromise” and a Microsoft blog about it, and check out coverage from CSO Online, Infosecurity Magazine, DarkReading and Cybersecurity Dive.

5 – Advisory: China sponsors stealthy, persistent attacks against critical infrastructure

Critical infrastructure networks are being silently breached by an attacker that lingers undetected and that’s backed by the Chinese government, according to a joint cybersecurity advisory from the U.S., U.K., Australian, Canadian and New Zealand governments.

The advisory, issued this week, details how the cyber actor, called Volt Typhoon, uses “living off the land” techniques to avoid detection. For example, it uses legitimate network administration tools to “blend in” with victims’ normal system and network activities.

(For a detailed take on Volt Typhoon, read the Tenable Security Response Team (SRT) blog “Volt Typhoon: International Cybersecurity Authorities Detail Activity Linked to Chinese-State Sponsored Threat Actor.”)

The advisory, published by the U.S. Cybersecurity and Infrastructure Security Agency (CISA), outlines the tactics, techniques and procedures used by Volt Typhoon and explains how organizations can hunt for and mitigate the cyber actor’s malicious activity on their network.
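
As a rough illustration of what hunting for “living off the land” activity can look like, the Python sketch below scans process-creation records for built-in administration tools that merit a closer look. The watchlist patterns and the `host` and `command_line` field names are assumptions made for this example, not indicators copied from the advisory. Because these tools are legitimate, a match is a lead to review in context, not proof of compromise.

```python
import re

# Illustrative watchlist only (an assumption, not taken from the advisory):
# built-in Windows tools that are legitimate but worth reviewing in context.
WATCHLIST = {
    "ntdsutil": re.compile(r"\bntdsutil(\.exe)?\b", re.IGNORECASE),
    "netsh portproxy": re.compile(
        r"\bnetsh(\.exe)?\b.*\bportproxy\b", re.IGNORECASE
    ),
    "wmic process create": re.compile(
        r"\bwmic(\.exe)?\b.*\bprocess\b.*\bcall\b.*\bcreate\b", re.IGNORECASE
    ),
}


def hunt(events):
    """Return (host, label, command_line) for every event matching the watchlist.

    `events` is an iterable of dicts with hypothetical keys "host" and
    "command_line", e.g. rows exported from process-creation logs.
    """
    hits = []
    for event in events:
        for label, pattern in WATCHLIST.items():
            if pattern.search(event["command_line"]):
                hits.append((event["host"], label, event["command_line"]))
    return hits
```

For real hunting, rely on the commands, queries and indicators published in the advisory itself, and baseline normal administrator behavior first so that routine use of these tools does not drown out the unusual cases.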

“Private sector partners have identified that this activity affects networks across U.S. critical infrastructure sectors, and the authoring agencies believe the actor could apply the same techniques against these and other sectors worldwide,” reads the 24-page advisory.

Volt Typhoon focuses on espionage, hence its emphasis on stealthy, persistent breaches. Its current efforts are likely aimed at eventually disrupting critical communications infrastructure between the U.S. and Asia, Microsoft’s Threat Intelligence team said in a blog post about its own research findings.

Active since mid-2021, Volt Typhoon has targeted critical infrastructure organizations across multiple sectors including communications, manufacturing, utilities, transportation, construction, government, technology and education, and in particular has focused on Guam, according to Microsoft. Guam, a U.S. island territory in the Western Pacific, has U.S. military bases.

To get more details, check out the full advisory “People’s Republic of China State-Sponsored Cyber Actor Living off the Land to Evade Detection” and CISA’s announcement, along with coverage from Help Net Security, CyberScoop, Axios, Dark Reading and Bleeping Computer, as well as the aforementioned Tenable SRT blog.

VIDEOS

China accused of spying on critical US infrastructure (BBC News)

Microsoft detects China-based cyberattacks targeting U.S. (Yahoo Finance)

6 – U.S. government updates ransomware guide

The #StopRansomware Guide has gotten its first update, including new recommendations, resources and tools that can help prevent, detect, respond to and recover from attacks that employ advanced tactics and techniques developed since the document’s publication in 2020.

That’s the word this week from CISA, the Federal Bureau of Investigation (FBI), the National Security Agency (NSA), and the Multi-State Information Sharing and Analysis Center (MS-ISAC).

“With our FBI, NSA and MS-ISAC partners, we strongly encourage all organizations to review this guide,” said CISA’s Executive Assistant Director for Cybersecurity Eric Goldstein in a statement.

Here’s some of what’s new in the guide:

- Added recommendations for preventing common initial infection vectors, including compromised credentials and advanced forms of social engineering

- Updated tips to address cloud backups and zero trust architecture (ZTA)

- Expanded the response checklist with threat hunting tips for detection and analysis

- Mapped recommendations to CISA’s Cross-Sector Cybersecurity Performance Goals (CPGs)

To get more details, read the joint announcement from CISA, the FBI, the NSA and MS-ISAC, dive into the full, updated guide and check out coverage from NextGov, TechTarget and SDxCentral.
