Cybersecurity Snapshot: NIST Program Assesses How AI Systems Will Behave in the Real World, While FBI Has Troves of Decryption Keys for LockBit Victims

Check out the new ARIA program from NIST, designed to evaluate whether an AI system will be safe and fair once it’s launched. Plus, the FBI offers to help LockBit victims with thousands of decryption keys. In addition, Deloitte finds that boosting cybersecurity is key for generative AI deployment success. Meanwhile, find out why identity security is getting harder. And much more!

Dive into six things that are top of mind for the week ending June 7.

1 - NIST program will test safety, fairness of AI systems

Will that artificial intelligence (AI) system now in development behave as intended once it’s released or will it go off the rails?

It’s a critical question for vendors, enterprises and individuals developing AI systems. To help answer it, the U.S. government has launched an AI testing and evaluation program.

Called Assessing Risks and Impacts of AI (ARIA), the National Institute of Standards and Technology (NIST) program will make a “sociotechnical” assessment of AI systems and models.

That means ARIA will determine whether an AI system will be valid, reliable, safe, secure, private and fair once it’s live in the real world.

“In order to fully understand the impacts AI is having and will have on our society, we need to test how AI functions in realistic scenarios – and that’s exactly what we’re doing with this program,” U.S. Commerce Secretary Gina Raimondo said in a statement.

The program, now in version 0.1, goes beyond assessments of system performance and accuracy, and aims instead at measuring what NIST calls “technical and societal robustness.” Its evaluations will include model testing, red teaming and field testing.

To get more details, check out:

- NIST’s announcement “NIST Launches ARIA, a New Program to Advance Sociotechnical Testing and Evaluation for AI”

- The ARIA program’s home page

- The ARIA FAQ

For more information about ethical and secure AI systems:

- “6 Best Practices for Implementing AI Securely and Ethically” (Tenable)

- “What is responsible AI?” (TechTarget)

- “How to Implement AI — Responsibly” (Harvard Business Review)

- “Building a responsible AI: How to manage the AI ethics debate” (International Organization for Standardization)

- “AI Safety vs. AI Security: Navigating the Commonality and Differences” (Cloud Security Alliance)

2 - FBI has thousands of LockBit decryption keys, urges victims to reach out

Victims of the LockBit ransomware-as-a-service group should contact the FBI’s Internet Crime Complaint Center (IC3), because the agency has more than 7,000 LockBit decryption keys.

Using the keys, the FBI “can help victims reclaim their data and get back online,” FBI Cyber Division Assistant Director Bryan Vorndran said this week at a conference in Boston.

The FBI was part of a multinational operation dubbed Cronos that severely disrupted LockBit in February and yielded an initial batch of more than 1,000 decryption keys.

The LockBit ransomware variant has been used in more than 2,400 attacks globally, including more than 1,800 in the U.S., resulting in billions of dollars in damages, Vorndran said.

As part of Operation Cronos, investigators discovered that the LockBit group and its affiliates held on to ransomed data even after receiving payment and claiming to have deleted it.

To get more details, check out:

- The FBI announcement “FBI Cyber Lead Urges Potential LockBit Victims to Contact Internet Crime Complaint Center”

- Vorndran's prepared remarks at the 2024 Boston Conference on Cyber Security

For more information about ransomware prevention, trends and best practices:

- “How Can I Protect Against Ransomware?” (CISA)

- “The Ransomware Ecosystem” (Tenable)

- “Preventing Ransomware Attacks at Scale” (Harvard Business Review)

- “How to prevent ransomware in 6 steps” (TechTarget)

- “How to Prevent and Recover from Ransomware” (National Cybersecurity Alliance)

3 - Deloitte: Cybersecurity key to GenAI’s success

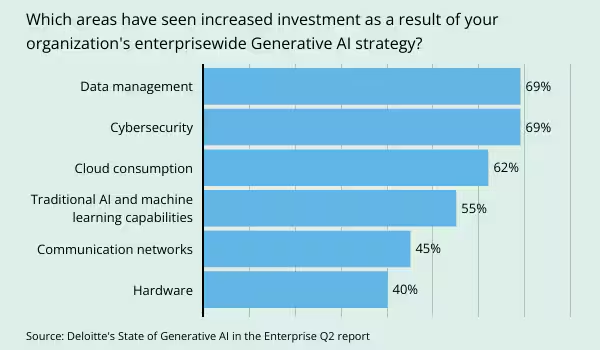

For their generative AI deployments to succeed, organizations must simultaneously beef up three critical technology areas, including cybersecurity.

That’s according to Deloitte’s “State of Generative AI in the Enterprise” report for Q2, based on a survey of almost 2,000 business and technology leaders whose organizations are advanced AI users.

The other two areas are data management and cloud consumption, according to Deloitte, which forecasts that enterprise spending on generative AI will increase by 30% this year.

“These three capabilities, each important in their own right, form a constellation that can create even greater impact—in this case, as enablers of gen AI,” reads a Deloitte article about the report.

To get more details, check out:

- Deloitte’s article “Gen AI investment opportunities center on data, cybersecurity, and cloud, Deloitte survey finds”

- Deloitte’s “State of Generative AI in the Enterprise” report for Q2

4 - IDSA: Securing identities gets more challenging

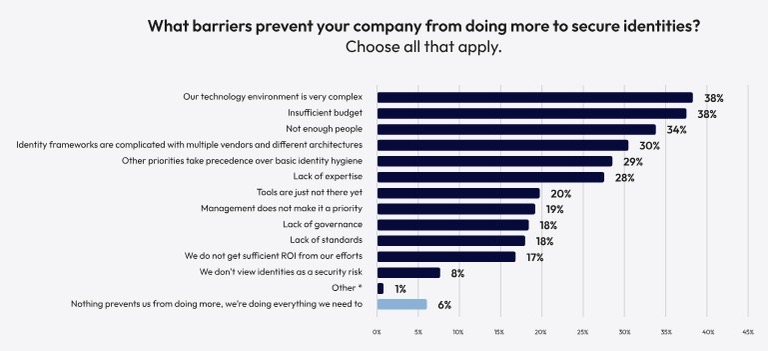

Is securing identities at your organization becoming more complex and a higher priority, as identity incidents rise? If so, you’re not alone.

In the past year, 90% of U.S. organizations suffered an identity-related incident, with 84% reporting a direct business impact, according to the Identity Defined Security Alliance’s “2024 Trends in Identity Security” study, which surveyed 521 identity and security pros at U.S. organizations with more than 1,000 employees.

“We continue to see that securing these identities remains a significant challenge, and security outcomes remain a large work in progress,” reads the report.

Key findings from the report include:

- The share of organizations that rank managing and securing identities as the top priority of their security programs grew from 17% last year to 22%. It’s a top-three priority at 73% of organizations, up from 61% in 2023.

- Managing identity sprawl is a major focus for 57% of respondents.

- The most common identity-related incident faced by respondents was phishing (cited by 69%).

- Most respondents (96%) expect that AI will help their organizations secure their identities, especially for identifying anomalous behavior and assessing alert severity.

And what was the top barrier to securing identities? A very complex tech environment.

(Source: Identity Defined Security Alliance’s “2024 Trends in Identity Security,” May 2024)

To get more details, read:

- The study’s announcement

- The study “2024 Trends in Identity Security”

For more information about identity and access management (IAM) security, check out these Tenable resources:

- “Identities: The Connective Tissue for Security in the Cloud” (blog)

- “Managing Security Posture and Entitlements in the Cloud” (on-demand webinar)

- “Strengthen the Effectiveness of Your Identity Security Program with Zero Trust Maturity” (on-demand webinar)

- “Why You Need Contextual Intelligence in the Age of Identity-First and Zero Trust Security, and How to Get It Now” (on-demand webinar)

- “The MGM Breach and the Role of Identity Providers in Modern Cyber Attacks” (blog)

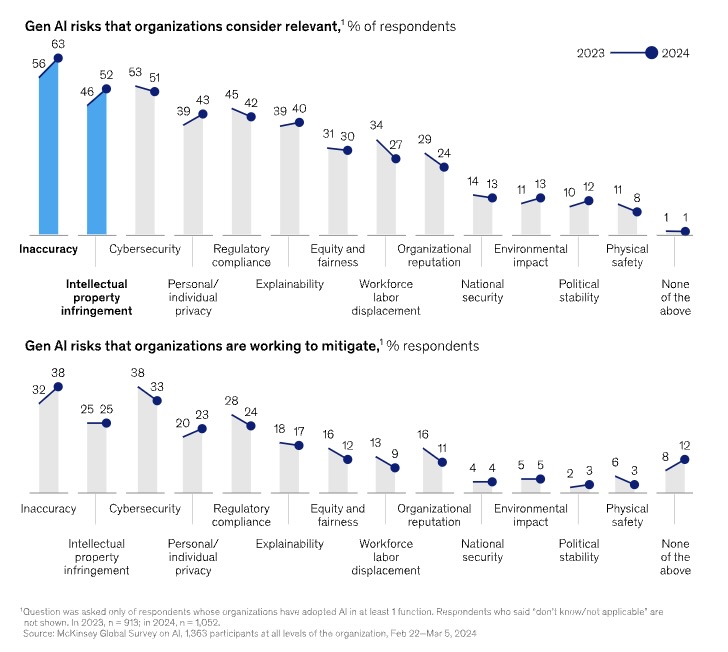

5 - McKinsey: Inaccuracy tops orgs’ GenAI concerns

Over the past year, organizations have become more worried about the erratic information produced by their generative AI systems, viewing it now as a key risk they must manage.

That’s according to the “McKinsey Global Survey on AI,” which polled almost 1,400 respondents representing a broad range of roles, industries, company sizes and experience.

“Inaccuracy – which can affect use cases across the gen AI value chain, ranging from customer journeys and summarization to coding and creative content – is the only risk that respondents are significantly more likely than last year to say their organizations are actively working to mitigate,” reads the McKinsey report about the survey.

Almost a quarter of respondents using generative AI said their organizations have suffered a negative impact from the inaccuracy of their generative AI systems. That ranks inaccuracy at the top of generative AI risks with negative consequences, followed by cybersecurity, explainability and intellectual property infringement.

Other study findings include:

- 65% of organizations polled are using generative AI “regularly,” almost double the percentage from last year’s survey.

- Concrete benefits from generative AI use include lower costs, especially in human resources, and revenue boosts, particularly in supply chain management.

- Respondents are using a mix of commercial off-the-shelf generative AI tools (53%) and custom-built in-house systems (47%).

To get more details, check out the McKinsey report “The state of AI in early 2024: Gen AI adoption spikes and starts to generate value.”

For more information about inaccuracy issues in generative AI systems:

- “Hallucinations: Why AI Makes Stuff Up, and What’s Being Done About It” (CNET)

- “AI hallucinations: The 3% problem no one can fix slows the AI juggernaut” (SiliconANGLE)

- “AI Hallucinations: The Emerging Market for Insuring Against Generative AI's Costly Blunders” (Cloud Security Alliance)

- “Legal GenAI tools mislead 17% of time: Stanford study” (Legal Dive)

VIDEO: Why AI hallucinations are here to stay (TechTalk)

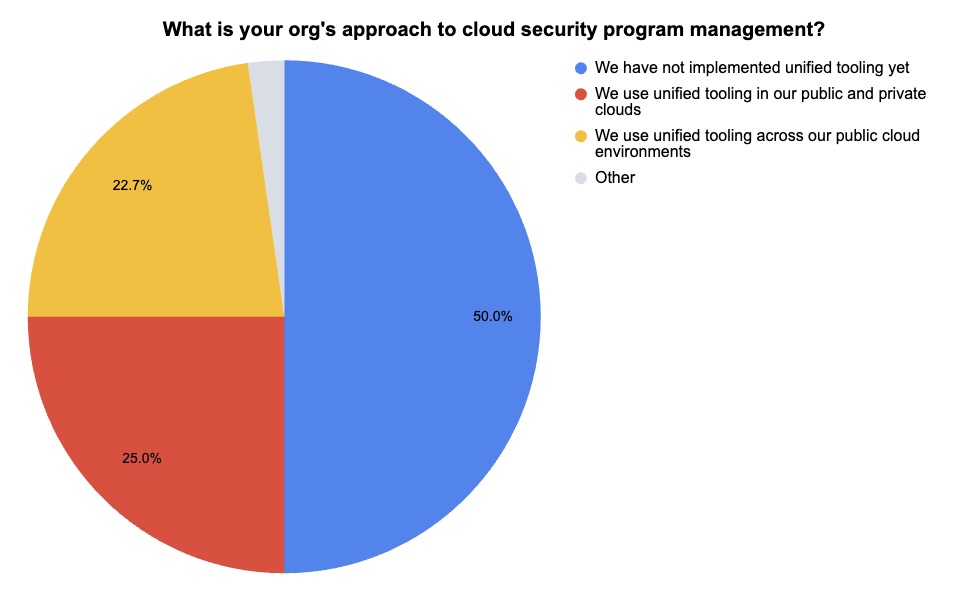

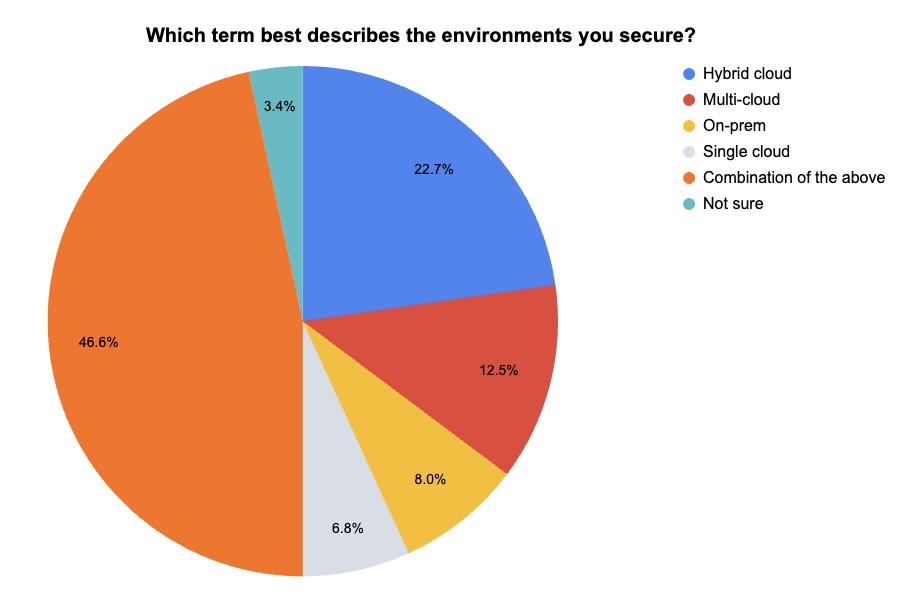

6 - Tenable poll takes the pulse of cloud sec strategies

During our recent webinar “Secure Your Cloud-Native Applications: 5 Key Considerations for Improving the Impact and Efficiency of Your Efforts,” we polled attendees about the environments they secure and about their cloud security program management. Check out what they said!

(56 webinar attendees polled by Tenable, May 2024)

(88 webinar attendees polled by Tenable, May 2024)

To learn how to boost the security of your cloud-native applications in hybrid and multi-cloud environments, tune in to the on-demand webinar “Secure Your Cloud-Native Applications: 5 Key Considerations for Improving the Impact and Efficiency of Your Efforts.”
