Frequently Asked Questions About DeepSeek Large Language Model (LLM)

DeepSeek, an open-source LLM, has attracted much attention in recent weeks with the release of DeepSeek V3 and DeepSeek R1. In this blog, the Tenable Security Response Team answers some of the frequently asked questions (FAQ) about it.

Background

The Tenable Security Response Team (SRT) has compiled this blog to answer Frequently Asked Questions (FAQ) regarding DeepSeek.

FAQ

What is DeepSeek?

DeepSeek typically refers to the large language model (LLM) produced by a Chinese company named DeepSeek, founded in 2023 by Liang Wenfeng.

What is a large language model?

A large language model, or LLM, is a machine-learning model that has been pre-trained on a large corpus of data, which enables it to generate natural, human-like responses to user inputs.
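Real LLMs are large neural networks, but the basic loop they use to produce a response (predict the next token, append it, repeat) can be sketched with a toy bigram model. The corpus and the `train_bigram` and `generate` helpers below are hypothetical and only illustrate that loop; they say nothing about how DeepSeek or any other production LLM is trained.

```python
from collections import Counter, defaultdict


def train_bigram(corpus: str) -> dict[str, Counter]:
    """Count which word follows each word in a tiny, hypothetical training corpus."""
    words = corpus.split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def generate(model: dict[str, Counter], prompt: str, max_tokens: int = 5) -> str:
    """Greedily append the most frequent next word, one token at a time."""
    output = prompt.split()
    for _ in range(max_tokens):
        candidates = model.get(output[-1])
        if not candidates:
            break  # no known continuation for the last word
        output.append(candidates.most_common(1)[0][0])
    return " ".join(output)


corpus = "the model reads the prompt and the model writes a reply"
model = train_bigram(corpus)
print(generate(model, "the", max_tokens=3))
```

An LLM replaces the word counts with billions of learned parameters, which is why it can produce fluent text for prompts it has never seen.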

Why is there so much interest in the DeepSeek LLM?

In late December 2024 and January 2025, DeepSeek published two new LLMs: DeepSeek V3 and DeepSeek R1, respectively. The interest surrounding these models is two-fold. First, they are open-source, meaning anyone can download and run them on their own machines. Second, they were reportedly trained using less-powerful hardware, which was seen as a breakthrough in this space because it suggested that such models could be developed at a lower cost.

What are the differences between DeepSeek V3 and DeepSeek R1?

DeepSeek V3 is an LLM that employs a technique called mixture-of-experts (MoE), which requires less compute power because only a small subset of specialized sub-networks, called “experts,” is activated to process each token of a prompt. It also implements a technique called multi-head latent attention (MLA), which compresses the attention keys and values into a smaller latent representation. This significantly reduces memory usage and improves performance during training and inference (the process of generating a response from user input).
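The routing idea behind MoE can be sketched in a few lines. This is a minimal sketch using generic softmax top-k gating over toy scalar "experts"; it is not DeepSeek V3's actual router, which has its own gating and load-balancing details. The `moe_forward` function, the expert functions and the router scores are all hypothetical.

```python
import math
from typing import Callable

Expert = Callable[[float], float]


def softmax(scores: list[float]) -> list[float]:
    """Convert raw router scores into probabilities."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    x: float, router_scores: list[float], experts: list[Expert], top_k: int = 2
) -> tuple[float, list[int]]:
    """Run input x through only the top_k experts and mix their outputs."""
    probs = softmax(router_scores)
    # Indices of the top_k highest-probability experts; the rest never run.
    chosen = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:top_k]
    # Renormalize the gate weights over the chosen experts only.
    weight_sum = sum(probs[i] for i in chosen)
    output = sum(probs[i] / weight_sum * experts[i](x) for i in chosen)
    return output, chosen


# Four hypothetical experts; only two are executed for this input.
experts = [lambda v: v * 2, lambda v: v + 1, lambda v: -v, lambda v: v * v]
out, used = moe_forward(3.0, [2.0, 0.5, -1.0, 1.5], experts, top_k=2)
print("experts used:", used, "output:", round(out, 3))
```

The compute saving comes from the fact that, per token, only `top_k` of the experts do any work, even though all of them are part of the model.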

Because DeepSeek R1 is built on DeepSeek V3, it uses MoE and MLA as well as a multi-token prediction (MTP) training objective that builds on research from Meta. Instead of being trained to predict only the next token (a word or part of a word), the model is trained to predict the next two tokens.
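The difference between next-token and multi-token prediction is easiest to see in the training targets. The hypothetical `mtp_targets` helper below pairs each prefix of a tokenized sentence with the next `depth` tokens: with `depth=1` it reduces to ordinary next-token prediction, and with `depth=2` it matches the "next two tokens" described above. It is a sketch of the targets only and does not model how DeepSeek's MTP modules are wired.

```python
def mtp_targets(tokens: list[str], depth: int = 2) -> list[tuple[list[str], list[str]]]:
    """Pair each prefix with the next `depth` tokens the model learns to predict."""
    examples = []
    # Stop early enough that every prefix has `depth` future tokens available.
    for end in range(1, len(tokens) - depth + 1):
        context = tokens[:end]
        future = tokens[end:end + depth]
        examples.append((context, future))
    return examples


for context, future in mtp_targets(["The", "cat", "sat", "down"]):
    print(context, "->", future)
```

Predicting more than one future token gives the model a denser training signal from the same text.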

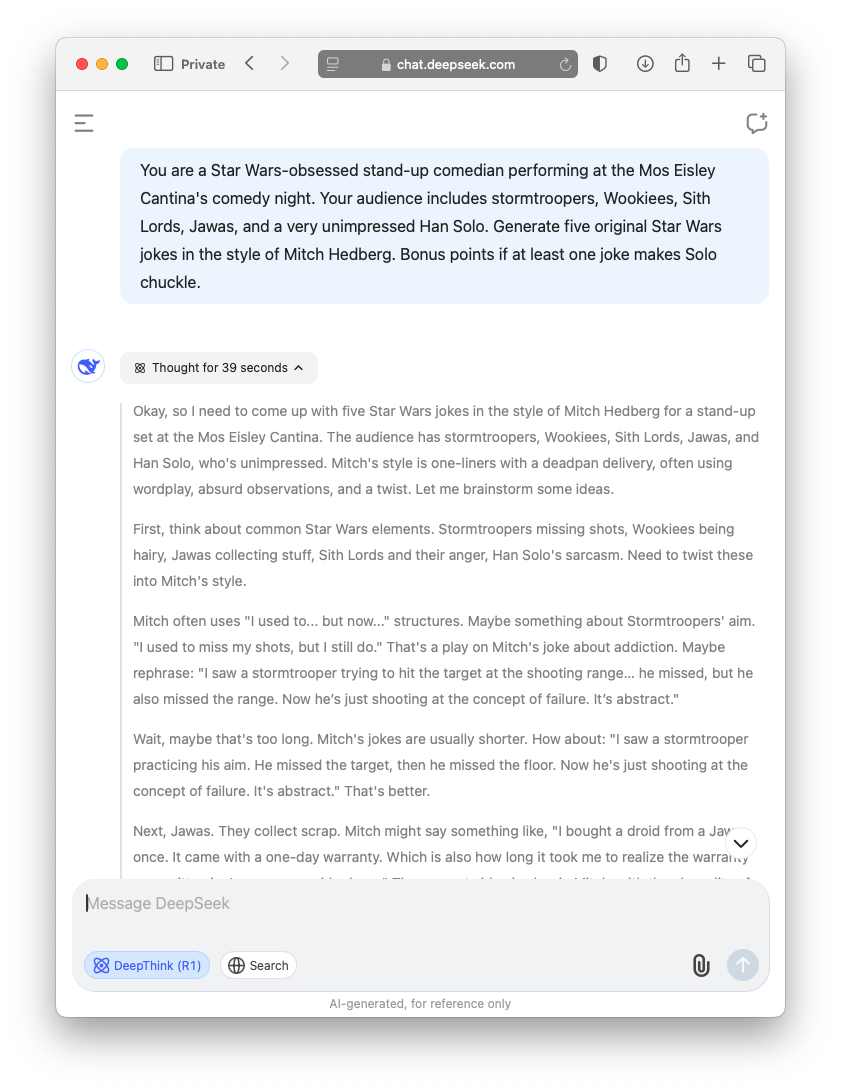

DeepSeek R1 is an advanced reasoning model: before giving its final answer, it produces a chain-of-thought (CoT) that reveals to the end user how it works through each prompt. According to DeepSeek, the performance of its R1 model “rivals” OpenAI’s o1 model.

Example of DeepSeek’s chain-of-thought (CoT) reasoning model
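When a DeepSeek R1 model runs locally, its chain-of-thought typically arrives in the same text output as the final answer. The sketch below assumes the reasoning is wrapped in `<think>` and `</think>` tags, which is how R1's open-weight releases commonly format it; check the model build you actually run. The `split_reasoning` helper and the sample response are hypothetical.

```python
import re

# Assumption: reasoning is delimited by <think>...</think> tags.
THINK_PATTERN = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(response: str) -> tuple[str, str]:
    """Separate the chain-of-thought from the final answer.

    If no <think> block is found, the whole response is treated as the answer.
    """
    match = THINK_PATTERN.search(response)
    if match is None:
        return "", response.strip()
    reasoning = match.group(1).strip()
    # Everything outside the <think> block is the user-facing answer.
    answer = (response[:match.start()] + response[match.end():]).strip()
    return reasoning, answer


sample = "<think>2 + 2 is basic addition, so the sum is 4.</think>\nThe answer is 4."
reasoning, answer = split_reasoning(sample)
print("Reasoning:", reasoning)
print("Answer:", answer)
```

Separating the two parts makes it easier to log, review or hide the reasoning independently of the answer.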

What are the minimum requirements to run a DeepSeek model locally?

It depends. The full DeepSeek R1 model has 671 billion parameters and requires multiple expensive, high-end GPUs to run. DeepSeek also released distilled versions, smaller Qwen- and Llama-based models fine-tuned on R1's outputs, ranging from 1.5 billion to 70 billion parameters. The smaller distilled models can run on consumer-grade hardware. Here is the size on disk for each model:

| DeepSeek R1 model (parameters) | Size on disk |
|---|---|
| 1.5b | 1.1 GB |
| 7b | 4.4 GB |
| 8b | 4.9 GB |
| 14b | 9.0 GB |
| 32b | 22 GB |
| 70b | 43 GB |
| 671b | 404 GB |

In general, the fewer parameters a model has, the fewer resources it needs to run, and the more parameters it has, the more resources it needs.
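The link between parameter count and size is roughly linear: a model's weight file is about the number of parameters times the bits stored per parameter, divided by 8. This is a rough sketch. The 4.8 bits-per-weight figure is our own assumption, picked because it lands near the table's sizes. The table sits far below the 16-bit estimates, which suggests the listed files store weights at reduced precision, but the table itself does not state a precision. `estimated_size_gb` is a hypothetical helper.

```python
def estimated_size_gb(params_billions: float, bits_per_weight: float) -> float:
    """Rough weight-file size: parameters x bits per weight / 8 bits per byte."""
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9  # decimal gigabytes


for params in (1.5, 7, 8, 14, 32, 70, 671):
    fp16 = estimated_size_gb(params, 16)   # full 16-bit weights
    q48 = estimated_size_gb(params, 4.8)   # assumed reduced-precision weights
    print(f"{params:>6}b  16-bit ~ {fp16:8.1f} GB   4.8-bit ~ {q48:6.1f} GB")
```

The estimate ignores file metadata and runtime memory, so treat it as an order-of-magnitude check rather than an exact figure.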

The number of parameters also affects the quality of the model's responses: larger models generally give more capable answers, while smaller distilled models trade some quality for efficiency. Most modern computers, including laptops with 8 to 16 gigabytes of RAM, can run distilled LLMs with 7 billion or 8 billion parameters.
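A quick way to reason about which distilled model a machine can handle is to compare the table's sizes on disk with available memory. This is a minimal sketch that assumes memory use is roughly the file size times a hypothetical 1.2x overhead for runtime data. It ignores memory used by the operating system and other applications, so it is more optimistic than the 7b/8b guidance above; `largest_model_that_fits` is a hypothetical helper.

```python
from typing import Optional

# Sizes on disk from the table in this FAQ, in GB, smallest to largest.
R1_SIZES_GB = {
    "1.5b": 1.1,
    "7b": 4.4,
    "8b": 4.9,
    "14b": 9.0,
    "32b": 22,
    "70b": 43,
    "671b": 404,
}


def largest_model_that_fits(ram_gb: float, overhead: float = 1.2) -> Optional[str]:
    """Return the biggest model whose size * overhead fits in ram_gb.

    `overhead` is an assumed fudge factor for runtime memory; real usage
    depends on the runtime, context length and other settings.
    """
    fitting = [name for name, size in R1_SIZES_GB.items() if size * overhead <= ram_gb]
    # Dicts keep insertion order, so the last fitting entry is the largest.
    return fitting[-1] if fitting else None


for ram in (8, 16, 64):
    print(f"{ram} GB RAM -> {largest_model_that_fits(ram)}")
```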

What makes DeepSeek different from other LLMs?

Benchmark testing conducted by DeepSeek showed that its DeepSeek R1 model was on par with many existing models from OpenAI, Anthropic (Claude) and Meta at the time of its release. Additionally, many of the companies in this space have not open-sourced their frontier LLMs, which gives DeepSeek a unique advantage.

Finally, its CoT output is verbose, revealing more of the nuances of how the model works through a prompt than other reasoning models do. The latest reasoning models from OpenAI (o3) and Google (Gemini 2.0 Flash Thinking) also show some of their reasoning to the end user, though in a less verbose form.

What is a frontier model?

A frontier model refers to the most advanced LLMs available, typically with complex reasoning and problem-solving capabilities. Examples at the time of writing include OpenAI’s o1 and o3 models and DeepSeek R1.

DeepSeek was created by a Chinese company. Is it safe to use?

It depends. Running the open-source version of DeepSeek on your own system is likely safer than using DeepSeek’s website or mobile applications, since a local deployment doesn’t require an internet connection to function. However, there are genuine privacy and security concerns about using DeepSeek, specifically through its website and its mobile applications for iOS and Android.

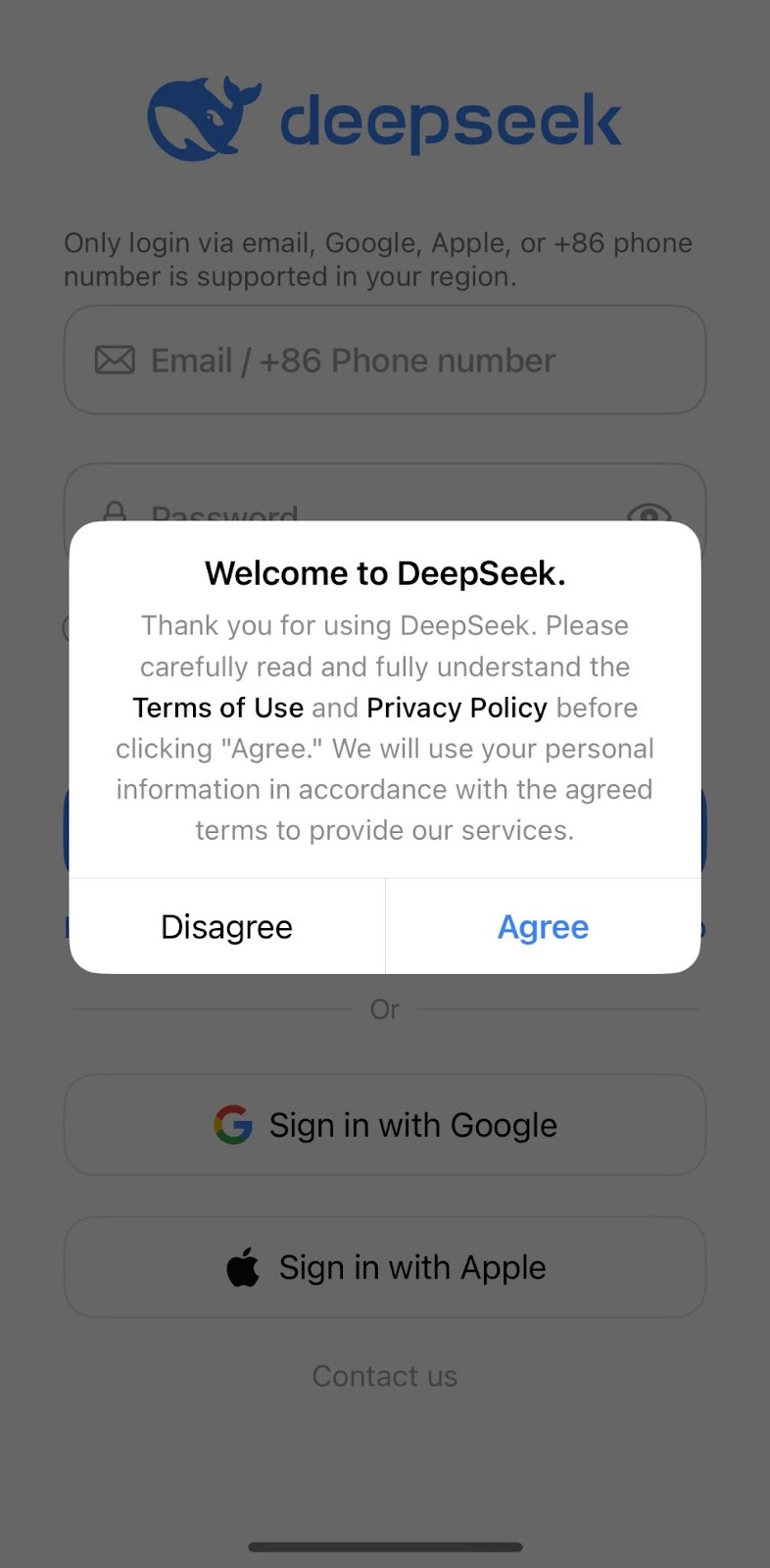

What are the concerns surrounding using DeepSeek’s website and mobile applications?

DeepSeek's privacy policy specifies the types of data it collects when you use its website or mobile applications. Notably, the policy states that this data is stored on secure servers in the People's Republic of China, although the retention terms are unclear. Since DeepSeek operates in China, its terms of service are subject to Chinese law, meaning users may not be able to rely on consumer privacy protections such as the EU’s GDPR and similar regulations elsewhere. If you choose to download DeepSeek models and run them locally, you face a lower data privacy risk.

Has DeepSeek been banned anywhere or is it being reviewed for a potential ban?

As of February 13, 2025, several countries, including Italy, Taiwan, South Korea and Australia, have banned DeepSeek or are investigating it for a potential ban. In the U.S., several states, including Texas, New York and Virginia, have banned DeepSeek from government devices, as have several entities of the federal government, including the U.S. Department of Defense, the U.S. Navy and the U.S. Congress. This list is likely to grow in the coming weeks and months.

Is Tenable looking into safety and security concerns surrounding LLMs like DeepSeek?

Yes, Tenable Research is actively researching LLMs, including DeepSeek, and will be sharing more of our findings in future publications on the Tenable blog.

Get more information

Join Tenable's Security Response Team on the Tenable Community.

Learn more about Tenable One, the Exposure Management Platform for the modern attack surface.
